Inaccurate data isn’t just an annoyance; it’s a silent killer of revenue. From broken lead scoring in Salesforce to flawed forecasts in Snowflake, poor data quality costs real money and erodes trust in your entire GTM strategy. The problem is, data breaks silently. A botched API update, a manual import error, or a subtle schema change in a dbt model can go unnoticed for weeks, corrupting dashboards and derailing campaigns. That’s where data quality monitoring tools come in. They act as the automated immune system for your data stack, detecting issues before they impact downstream teams.

If you’re a RevOps leader tired of firefighting data issues or a data engineer responsible for the integrity of your company’s analytics, you’re in the right place. This isn’t just another generic list. We’re diving deep into 12 leading solutions to help you find the perfect fit for your specific tech stack and business needs.

In this guide, you’ll get a practical breakdown of each platform, including:

- Core features and real-world use cases for RevOps.

- Honest pros, cons, and potential limitations.

- Specific integration details for Salesforce, Snowflake, and dbt.

- Pricing signals and deployment models (SaaS, open-source, hybrid).

We’ve done the heavy lifting to provide a clear, comprehensive comparison so you can confidently choose the right tool to stop reacting to data fires and start building a reliable data foundation for growth. Each option includes screenshots and direct links to help you evaluate further. Let’s get started.

1. Monte Carlo Data

Monte Carlo is often seen as the pioneer of the data observability category, and for good reason. It offers a comprehensive, end-to-end platform designed to eliminate “data downtime” by monitoring data quality across your entire stack, from ingestion in the warehouse to consumption in BI tools. This is a powerful tool for teams who need to build trust in their data and resolve incidents before they impact downstream consumers like the RevOps or finance teams.

What makes Monte Carlo one of the leading data quality monitoring tools is its automated, machine-learning-driven approach. Instead of requiring you to manually define every possible rule, it learns your data’s normal patterns and alerts you when things deviate. This includes freshness, volume, schema, and distribution anomalies. For a deeper dive into this approach, you can learn more about data observability and its core pillars.

Key Details & Use Cases

- Best For: Mature data teams and enterprises needing a robust, all-in-one solution for data reliability across complex environments (Snowflake, dbt, Salesforce, etc.).

- Standout Feature: Its end-to-end lineage is incredibly valuable for root-cause analysis. You can quickly trace a broken dashboard back to a specific table change or failed pipeline job, drastically reducing troubleshooting time.

- Deployment: Cloud-based, with straightforward integration into major data warehouses, lakes, and BI platforms.

- Pricing: Quote-based. Procurement is sales-led, so you’ll need to engage with their team. This model is typical for enterprise-grade software but can be a hurdle for smaller teams wanting to self-serve.

- Pros: Very mature feature set, strong security and compliance options, and excellent lineage capabilities.

- Cons: Pricing isn’t transparent, and the comprehensive feature set might be overkill for small teams or startups just beginning their data quality journey.

Visit Website: https://www.montecarlodata.com

2. Bigeye

Bigeye is an enterprise-grade data observability platform that excels in providing deep, automated monitoring with an engineer-friendly workflow. It’s designed for data teams that want to manage data quality checks as code, offering robust tooling for both modern and legacy data stacks. The platform helps teams build and maintain trust in their data by proactively identifying and resolving issues before they impact business operations.

What sets Bigeye apart is its emphasis on developer-centric practices, such as its Bigconfig feature, which allows you to define and version-control data quality monitors using YAML. With over 60 prebuilt metrics and powerful anomaly detection, it automates much of the manual toil associated with ensuring data is accurate and reliable. For teams looking to integrate these checks directly into their workflows, it’s a compelling option among modern data pipeline monitoring tools.

Key Details & Use Cases

- Best For: Data engineering teams and organizations with hybrid (cloud and on-prem) data environments who prefer a configuration-as-code approach to data quality.

- Standout Feature: Its Bigconfig YAML-based monitoring-as-code allows for version control, collaboration, and programmatic management of data quality checks, which is a huge win for engineering-focused teams.

- Deployment: Cloud-based platform with connectors for both cloud and on-premise data sources, backed by enterprise security certifications.

- Pricing: Quote-based and sales-led. While not transparent, Bigeye is available on the AWS Marketplace, which can simplify procurement and billing for companies in the AWS ecosystem.

- Pros: Very engineer-friendly with its CLI and config-as-code workflows, strong column-level lineage for root cause analysis, and excellent support for complex, hybrid environments.

- Cons: Public pricing is not available, and its advanced features require a significant investment of time to properly model critical data assets and realize their full value.

Visit Website: https://www.bigeye.com

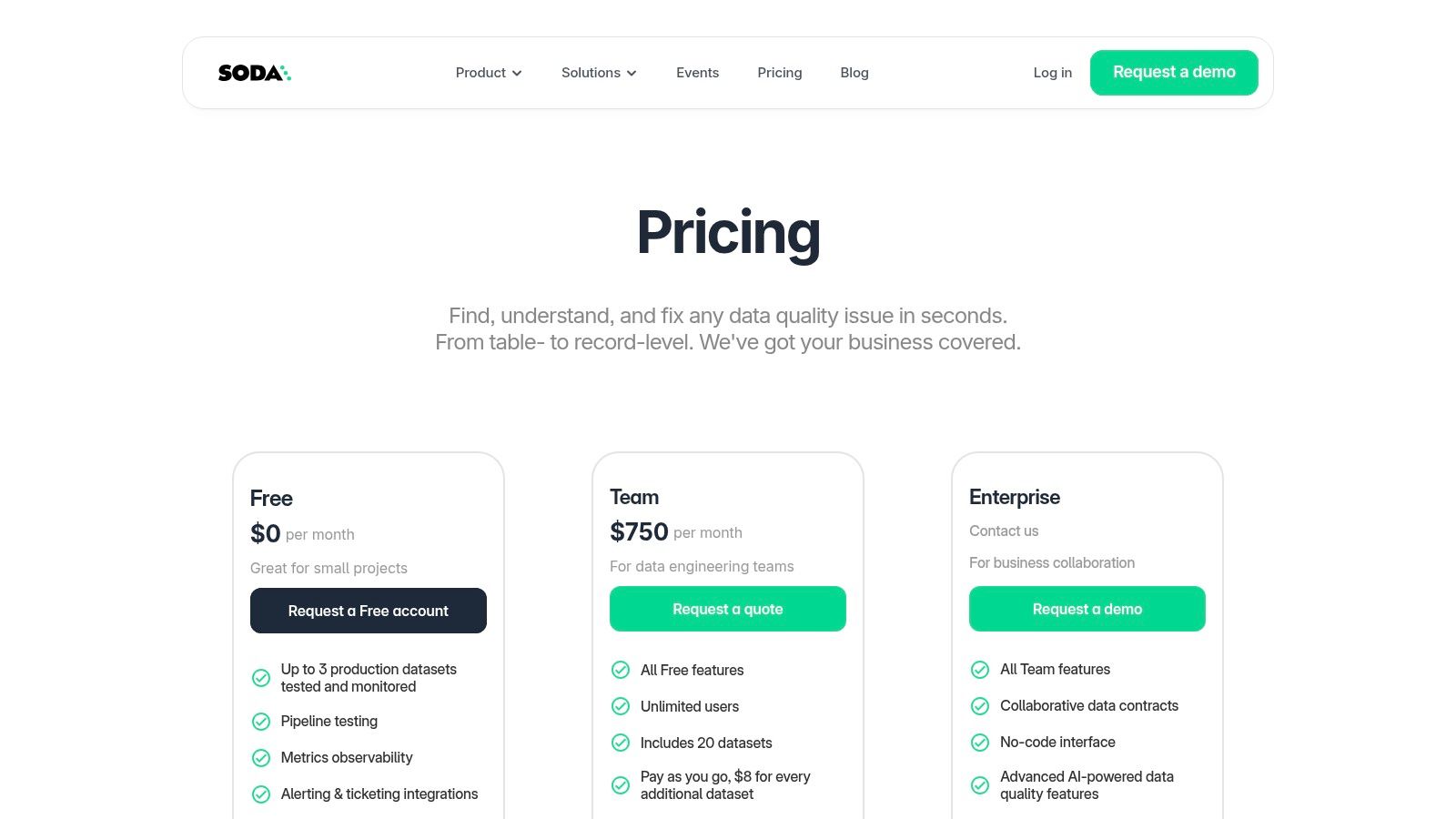

3. Soda (Soda Cloud + Soda Core)

Soda stands out in the data quality monitoring tools landscape with its developer-first approach and exceptionally transparent pricing model. It offers both an open-source framework (Soda Core) and a managed cloud platform (Soda Cloud), giving teams the flexibility to start small and scale. This dual offering allows engineers to define data quality checks as code, integrating testing directly into their data pipelines, while the UI provides a collaborative space for monitoring, alerting, and incident management.

What makes Soda particularly appealing is its accessibility. The combination of a powerful free tier and public pricing removes the barrier to entry that many teams face with enterprise-only tools. It’s an AI-native platform designed to help teams define tests, monitor datasets, and automate alerts with integrations into common tools like Slack and Jira, making it a practical choice for teams eager to implement data quality checks without a lengthy sales process.

Key Details & Use Cases

- Best For: Data engineering teams and startups that want to adopt a “data quality-as-code” methodology and require transparent, predictable pricing.

- Standout Feature: The clear and public pricing, including a generous free tier for up to three production datasets. This allows teams to get hands-on and prove value immediately without any financial commitment or sales calls.

- Deployment: Hybrid model. Soda Core is open-source and can be run anywhere, while Soda Cloud is a managed SaaS platform.

- Pricing: Publicly available on their website. Starts with a free plan, with paid tiers that add features like catalog integrations and more datasets. The per-dataset pricing model is straightforward.

- Pros: Very clear and accessible pricing model, strong “as-code” capabilities for engineers, and a quick time-to-value for smaller teams.

- Cons: The per-dataset pricing model can become costly for organizations with a very large number of tables to monitor, and certain enterprise features still require a sales-led conversation.

Visit Website: https://www.soda.io/pricing

4. Great Expectations (GX Cloud)

Great Expectations has long been a staple in the open-source world for asserting what your data should look like. GX Cloud is the commercial offering that builds on this powerful foundation, providing a managed, collaborative environment to deploy and scale these “Expectations.” It shifts the paradigm from anomaly detection to declarative, expectation-based testing, making it a powerful tool for teams who want to codify their data contracts directly into their pipelines.

What makes GX Cloud one of the most trusted data quality monitoring tools is its agent-based architecture and strong community roots. Instead of moving your data, a lightweight agent runs validations directly within your environment, which is excellent for security and performance. This approach is ideal for teams who have already adopted the open-source version and are looking to add enterprise-level features like role-based access control, a shared UI for validation results, and dedicated support without losing the flexibility of the core framework.

Key Details & Use Cases

- Best For: Teams already invested in the Great Expectations open-source ecosystem or those who prefer a code-first, declarative approach to data quality testing.

- Standout Feature: The “Expectations” themselves are the core feature. They are expressive, human-readable definitions of what your data must look like (e.g.,

expect_column_values_to_not_be_null), making data quality rules transparent and maintainable as code. - Deployment: Cloud-hosted UI and control plane with a self-hosted agent that connects to your data sources like Snowflake, BigQuery, and more.

- Pricing: A free tier is available for individuals. Paid Team and Enterprise tiers are quote-based and require engaging with their sales team for detailed pricing.

- Pros: Built on a widely adopted open-source standard, strong community support, and an agent architecture that validates data where it lives.

- Cons: Pricing for Team and Enterprise tiers isn’t public. The platform’s full power requires a solid governance process and a team comfortable with a code-centric workflow.

Visit Website: https://gxcloud.com

5. Datafold

Datafold takes a proactive, developer-centric approach to data quality, focusing on preventing issues before they ever reach production. Its core strength lies in “data diffing,” which allows you to compare datasets across different environments (like development and production) at the value level. This is a game-changer for teams using dbt or other transformation tools, as it integrates directly into your CI/CD pipeline to catch regressions and validate changes within pull requests automatically.

This “shift-left” philosophy means that Datafold is one of the best data quality monitoring tools for teams who want to embed quality checks directly into their development workflow. Instead of waiting for a monitor in production to fail, your analytics engineers get immediate feedback on how their SQL changes will impact the data, complete with a column-level lineage impact analysis. This dramatically speeds up development cycles and increases confidence in every deployment.

Key Details & Use Cases

- Best For: Data engineering and analytics teams who live in dbt and want to automate regression testing and validation within their CI/CD process. It’s also exceptional for validating large-scale data migrations.

- Standout Feature: The cross-database data diff is its killer feature. It shows you the exact value-level differences between two datasets, making it incredibly easy to debug a failing dbt model or validate that a refactored pipeline produces identical results.

- Deployment: Offers flexible deployment models, including a multi-tenant SaaS cloud, a single-tenant VPC, and on-premise options to meet various security and compliance requirements.

- Pricing: Quote-based and sales-led. You will need to contact their sales team for a demo and pricing information tailored to your usage.

- Pros: Excellent for preventing bad data from entering production, powerful CI/CD integration, and flexible deployment choices for enhanced security.

- Cons: Pricing is not transparent. For teams with already mature and extensive CI testing scripts, its core functionality might have some overlap.

Visit Website: https://www.datafold.com/pricing

6. Anomalo

Anomalo offers a no-code, machine-learning-powered platform designed to automatically monitor your enterprise data warehouse. Its primary goal is to catch data quality issues before they impact business intelligence dashboards or operational systems. By profiling your data and learning its normal patterns, Anomalo can detect unexpected anomalies without requiring extensive manual rule configuration.

What makes Anomalo an interesting choice among data quality monitoring tools is its ease of setup and its availability on the AWS Marketplace. This simplifies procurement for teams already embedded in the AWS ecosystem, allowing for consolidated billing and streamlined purchasing. The platform’s unsupervised approach to detecting missing data, nulls, and other anomalies means data teams can get value quickly without a massive initial investment in rule engineering.

Key Details & Use Cases

- Best For: Data teams looking for a low-code, ML-driven solution that is fast to implement and easy to procure through cloud marketplaces.

- Standout Feature: Its deep table monitoring provides a comprehensive check on data integrity with minimal configuration. You can point Anomalo at a table, and it immediately starts identifying potential issues and trends.

- Deployment: Cloud-based, integrating with major data warehouses like Snowflake, BigQuery, and Redshift.

- Pricing: Quote-based via direct sales or through AWS Marketplace private offers. This procurement path is a significant convenience for teams wanting to consolidate their cloud spending.

- Pros: Very fast time-to-value due to its automated, low-code nature. The AWS Marketplace option drastically simplifies procurement and billing for many organizations.

- Cons: The reliance on “black-box” machine learning for detection can sometimes require extra effort to explain the root cause of an alert to business stakeholders. Pricing is not publicly listed.

Visit Website: https://www.anomalo.com

7. Ataccama ONE

Ataccama ONE is an enterprise-grade platform that goes beyond simple monitoring, positioning itself as a unified solution for data quality, governance, lineage, and master data management. It’s built for large organizations that need to manage data quality across complex hybrid and multi-cloud environments. The platform emphasizes automation and high-performance processing by pushing down rule execution directly into source systems, making it a robust choice for handling significant data volumes.

What sets Ataccama apart is its all-in-one approach. Instead of buying separate tools for a data catalog, quality checks, and governance, Ataccama bundles these capabilities together. Its AI-powered features assist in rule creation and anomaly detection, which helps teams scale their data quality initiatives without a linear increase in manual effort. This makes it one of the more comprehensive data quality monitoring tools for enterprises standardizing their data management stack.

Key Details & Use Cases

- Best For: Large enterprises seeking a unified data management platform that combines data quality with governance, especially those operating in hybrid or multi-cloud setups.

- Standout Feature: The pushdown processing and reusable business rules allow for high-performance data quality checks that run on-premise or in the cloud without moving massive datasets, which is a major advantage for security and performance.

- Deployment: Hybrid and multi-cloud. It’s designed to be flexible and work where your data lives.

- Pricing: Quote-based. This is an enterprise solution requiring a sales-led procurement process.

- Pros: Truly unified platform for quality, catalog, and governance; strong performance at scale for hybrid/multi-cloud environments.

- Cons: The procurement process is sales-led and not transparent; the implementation effort can be significant and is often more complex than more focused observability tools.

Visit Website: https://www.ataccama.com/platform/data-quality

8. Talend Data Quality (Qlik Talend Cloud)

Talend has long been a major player in the data integration and ETL space, and its data quality capabilities are a core part of its offering, now within the Qlik Talend Cloud. This platform combines data quality, integration, stewardship, and governance into a unified environment. For companies already invested in the Qlik ecosystem for analytics, or those looking for a single vendor to handle multiple data management functions, Talend presents a compelling and consolidated option.

What makes Talend a unique entry among these data quality monitoring tools is its deep connection to data transformation and movement. Instead of just monitoring data at rest, Talend’s tools are designed to cleanse, standardize, and enrich data as it flows through pipelines. This proactive approach helps prevent bad data from ever reaching its destination. Its capabilities go beyond just monitoring, making it a powerful solution for those exploring the best data transformation tools in conjunction with quality management.

Key Details & Use Cases

- Best For: Organizations that need to embed data quality checks directly into their ETL/ELT processes and those already using or considering the Qlik analytics platform.

- Standout Feature: The platform’s ability to handle data quality as an integral part of the integration workflow is a key differentiator. It offers powerful data profiling, cleansing, and matching capabilities that can be built into data pipelines visually.

- Deployment: Flexible deployments are available, including cloud, on-premise, and hybrid models to suit different enterprise needs.

- Pricing: Primarily sales-led and quote-based. However, Qlik Talend Cloud offers a 14-day free trial, providing a great way to test the platform’s capabilities before committing.

- Pros: Extensive library of connectors, a very accessible free trial, and strong synergy for existing Qlik customers.

- Cons: The breadth of the suite can create a steeper learning curve, and pricing for the full data quality and integration feature set is not transparently public.

Visit Website: https://www.talend.com

9. Informatica (IDMC) Cloud Data Quality

Informatica is a long-standing titan in the enterprise data management space, and its Cloud Data Quality offering within the Intelligent Data Management Cloud (IDMC) is a testament to that legacy. It provides a suite of AI-assisted capabilities designed for large organizations that need to embed data quality within a broader governance framework. This is more than just a monitoring tool; it’s part of an integrated platform covering data cataloging, master data management (MDM), and pipeline observability.

What makes Informatica one of the most comprehensive data quality monitoring tools is its holistic approach. It automates rule generation based on continuous data discovery and profiling, providing clear quality scorecards that business users can understand. This is particularly powerful for organizations needing to enforce strict data governance policies, such as standardizing address verification or cleansing customer data before it enters a system like Salesforce.

Key Details & Use Cases

- Best For: Large enterprises and heavily regulated industries that require a unified data management platform with deeply integrated quality, governance, and cataloging features.

- Standout Feature: The seamless integration with its broader IDMC ecosystem is a key differentiator. A data quality issue flagged here can be traced through its catalog, governed by its policies, and mastered in its MDM hub, all within one platform.

- Deployment: Cloud-based (IDMC platform).

- Pricing: Utilizes a consumption-based model with trial paths available. However, understanding the full cost often requires engaging with their sales team, as the pricing details are not fully transparent on the website.

- Pros: Enterprise-grade depth with an extensive ecosystem of data management tools, and a flexible consumption-based pricing model.

- Cons: The platform’s breadth can be overwhelming and unnecessarily heavy for smaller teams, and getting a clear price requires contact beyond the headline model.

Visit Website: https://www.informatica.com/products/data-quality.html

10. Collibra

Collibra is a well-established leader in the data intelligence and governance space, offering a platform where data quality is a core component, not just an add-on. Its approach deeply integrates data quality monitoring with its broader data catalog, lineage, and policy management capabilities. This makes it an excellent choice for organizations that need to tie their quality rules directly to business context and governance frameworks.

What sets Collibra apart among data quality monitoring tools is its “pushdown” processing model. Instead of moving large datasets to a separate environment for analysis, it sends the quality checks directly to the source systems like Snowflake or Databricks. This method enhances performance, reduces data egress costs, and keeps sensitive data within its secure native environment, a critical consideration for enterprises with strict security postures.

Key Details & Use Cases

- Best For: Large enterprises and regulated industries that prioritize a governance-first approach to data management and already leverage or plan to adopt the broader Collibra platform.

- Standout Feature: The tight integration between the data catalog and data quality module. This allows you to define a data quality rule, link it to a specific business term in the catalog (e.g., “Active Customer”), and see its impact across all related data assets.

- Deployment: Cloud-based platform with connectors to major on-premise and cloud data sources.

- Pricing: Quote-based and sales-led. It is positioned as an enterprise-grade solution, so pricing reflects its comprehensive governance capabilities.

- Pros: A holistic, governance-native approach aligns quality with business policies, and its pushdown processing is excellent for security and performance.

- Cons: Pricing is not transparent and can be substantial. The platform’s true value is unlocked when using its full suite of governance tools, which might be too complex for teams solely focused on operational data quality monitoring.

Visit Website: https://www.collibra.com/us/en/products/data-intelligence-platform

11. AWS Marketplace (Data Quality Solutions)

For teams deeply embedded in the AWS ecosystem, the AWS Marketplace isn’t a tool itself but a strategic procurement channel. It acts as a centralized hub where you can discover, evaluate, and deploy a wide range of third-party data quality monitoring tools. This approach streamlines the often-arduous processes of vendor security reviews, contract negotiations, and billing, consolidating everything into your existing AWS account.

What makes the marketplace so effective is its integration with your company’s cloud budget and security posture. You can leverage private offers for custom pricing and terms, and often deploy solutions with a few clicks using predefined templates (like SaaS, AMI, or EKS). This removes significant friction for engineering and finance teams, allowing you to get a new data quality solution up and running much faster than traditional procurement paths.

Key Details & Use Cases

- Best For: Companies with an established AWS footprint looking to simplify the procurement and billing of data quality tools.

- Standout Feature: The ability to consolidate vendor billing directly into your AWS bill is a huge advantage for finance and procurement teams. It also allows you to burn down your AWS enterprise discount program (EDP) commitment.

- Deployment: Varies by vendor, but the marketplace supports multiple one-click deployment models, including direct SaaS subscriptions and private Amazon VPC deployments.

- Pricing: Vendor-specific. While the marketplace facilitates the transaction, pricing is set by each tool’s provider and often requires engaging with them for a private offer.

- Pros: Simplifies procurement, security reviews, and billing. It provides broad vendor coverage with standardized contract mechanisms.

- Cons: Feature vetting still requires individual trials or POCs. Pricing can be abstracted behind contract SKUs, so you still need to talk to the vendor.

Visit Website: https://aws.amazon.com/marketplace

12. G2 (Data Quality Tools Category)

While not a tool itself, G2’s Data Quality category is an indispensable resource for navigating the crowded market. It acts as a meta-tool for your research process, offering a crowdsourced, real-world perspective on how different platforms perform. By aggregating user reviews, it helps you cut through marketing hype and understand how tools are actually used day-to-day, which is crucial when evaluating various data quality monitoring tools for your specific needs.

Instead of just taking a vendor’s word for it, you can use G2’s grids and filters to create a shortlist based on company size, industry, or required features. This is a practical first step to identify which solutions genuinely resonate with users in situations similar to yours, helping you compare leaders, high performers, and niche players in one place.

Key Details & Use Cases

- Best For: Any team in the evaluation phase of selecting a data quality tool, from startups to enterprise buyers. It’s excellent for initial discovery and validating vendor claims.

- Standout Feature: The G2 Grid® for Data Quality offers a powerful visual snapshot of the market landscape, plotting tools based on user satisfaction and market presence. You can quickly see who the established leaders are versus emerging contenders.

- Deployment: N/A (It’s a web-based research platform).

- Pricing: Free to use for research. G2 makes money from vendors who pay for enhanced profiles and lead generation.

- Pros: Provides independent, aggregated user feedback to balance vendor-led messaging. The detailed filtering helps you narrow down a very long list of potential tools efficiently.

- Cons: Be mindful of potential sampling bias in reviews and note that some vendors pay for premium placements, which can influence visibility. It’s a starting point, not the final word.

Visit Website: https://www.g2.com/categories/data-quality

Top 12 Data Quality Monitoring Tools Comparison

| Product | Core focus / Key features | Target audience | Unique selling points | Pricing & procurement |

|---|---|---|---|---|

| Monte Carlo Data | Table/pipeline/BI monitoring, column/table/query lineage, incident management | Teams → enterprise data orgs needing full observability | Mature enterprise features, automated triage & root-cause analysis | Sales-led; pricing not public |

| Bigeye | Lineage-enabled monitoring, 60+ metrics, config-as-code (Bigconfig), hybrid connectors | Engineering teams, hybrid/complex stacks | CLI/version-control workflows, engineer-first monitoring-as-code | Sales engagement; marketplace purchasing option |

| Soda (Soda Cloud + Core) | Dataset tests, alerting, no-code UI + dev tooling, public pricing & free tier | Small to mid teams and data engineers | $0 starter tier, transparent pricing, quick time-to-value | Public pricing; per-dataset add-ons |

| Great Expectations (GX Cloud) | Expectations-based validation, RBAC, hosted agent, shared workspace | Teams using open-source GE and pipeline validation | Strong OSS foundation; agent validates data where it lives | Commercial tiers; team/enterprise via sales |

| Datafold | Cross-db value-level diffs, CI/CD integration, PR feedback & impact analysis | Migration projects, CI-driven engineering teams | Value diffs for catch-before-prod; automated PR comments | Sales-led; no public list price |

| Anomalo | ML-driven anomaly detection, deep table monitoring, easy setup | Teams wanting fast, low-code anomaly detection | Unsupervised checks; AWS Marketplace procurement | Contract pricing; available via AWS Marketplace |

| Ataccama ONE | Enterprise data quality, governance, pushdown processing, AI-assisted rules | Large enterprises with hybrid/multi-cloud needs | Unified DQ + governance at scale; pushdown/on-edge processing | Enterprise sales motion |

| Talend Data Quality (Qlik Talend Cloud) | Profiling, stewardship, governance, many connectors | Organizations using Qlik or broad connector needs | Broad connector ecosystem; easy 14-day trial | Sales-assisted pricing; free trial available |

| Informatica (IDMC) Cloud Data Quality | AI-assisted rule generation, profiling, cleansing, MDM integration | Enterprises needing end-to-end data management | Deep enterprise ecosystem; consumption pricing options | Consumption model; contact required for details |

| Collibra | Governance-native DQ, profiling, pushdown to Snowflake/Databricks, policy alignment | Organizations prioritizing governance + catalog | Tight catalog & policy integration; reduces data movement | Sales-led; enterprise-focused pricing |

| AWS Marketplace (Data Quality) | Centralized listings, private offers, one-click deploys, consolidated AWS billing | Procurement teams buying via AWS with consolidated billing | Simplifies procurement and billing; private offers & deployment options | Marketplace contracts; vendor-dependent pricing |

| G2 (Data Quality Category) | Crowdsourced reviews, category grids, filters, vendor profiles | Buyers shortlisting and validating vendor fit | Independent user feedback, rankings and sentiment insights | Free to use; directs to vendor sites for purchase |

Build, Buy, or Augment? Making the Right Data Quality Choice

We’ve journeyed through a comprehensive landscape of modern data quality monitoring tools, from comprehensive data observability platforms like Monte Carlo and Bigeye to the foundational open-source powerhouses of Soda and Great Expectations. Each tool offers a unique approach to a universal problem: how do we ensure the data driving our business is reliable, accurate, and trustworthy?

The core takeaway is that there’s no single “best” tool, only the right tool for your specific context. Your ideal solution depends heavily on your team’s technical maturity, the complexity of your data stack (especially the interplay between Salesforce, Snowflake, and dbt), and the business-critical nature of your data pipelines. A five-person startup has vastly different needs than a publicly-traded enterprise, and your choice should reflect that reality.

Key Takeaways and Actionable Next Steps

Making a confident decision requires moving from analysis to action. Here’s a simplified framework to guide your next steps:

-

Map Your “Data Jobs to Be Done”: Before you get mesmerized by feature lists, clearly define what you need a tool to do. Are you primarily fighting silent data errors in your Snowflake warehouse? Is your main goal to validate Salesforce data before it gets synced? Or do you need end-to-end lineage to trace a broken dashboard metric back to its source? Your answers will immediately narrow the field. For instance, teams heavily invested in dbt should give Soda and Great Expectations a serious look, while those needing automated, zero-configuration monitoring might lean toward Anomalo or Bigeye.

-

Evaluate the Total Cost of Ownership (TCO): The price tag is just the beginning. Factor in the engineering time required for implementation, integration, and ongoing maintenance. An open-source tool might be “free,” but it demands significant engineering overhead. Conversely, a managed platform like Monte Carlo or Ataccama ONE has a higher license cost but can reduce the internal workload. You must calculate the true cost in both dollars and team hours.

-

Run a Focused Proof of Concept (POC): Don’t make a decision based on demos alone. Select your top two or three contenders and run a time-boxed POC on a specific, high-impact data pipeline. Connect it to a real data source and a real business problem. This is the only way to genuinely assess a tool’s ease of use, the quality of its alerts, and how well it integrates with your existing workflows.

The Hidden Challenge: The Implementation Gap

Here’s a crucial insight we’ve learned from countless data projects: buying powerful data quality monitoring tools doesn’t automatically solve your problems. The biggest hurdle isn’t the tool; it’s the specialized engineering required to implement it effectively.

Even the most user-friendly platforms require someone to write production-grade code to connect data sources, configure custom monitors, fine-tune anomaly detection models, and integrate alerting into your team’s incident response workflow. This is where many data quality initiatives stall. Your data analysts are busy analyzing data, and your platform engineers are focused on core infrastructure, leaving a critical gap in the middle. Your team knows the “what” and the “why,” but gets stuck on the “how.”

This is precisely where augmenting your team with specialized expertise becomes a strategic advantage. Instead of viewing the decision as a simple build-versus-buy binary, consider a third path: buy and augment. You get the best-in-class technology from a dedicated vendor, paired with the on-demand engineering talent needed to ensure it delivers value from day one. This hybrid model allows your team to focus on leveraging trusted data to drive revenue, rather than getting bogged down in the complex mechanics of data plumbing and observability.

Ready to bridge the gap between buying a great tool and getting real value from it? RevOps JET provides on-demand, senior RevOps engineers to implement, configure, and manage your entire data quality stack. Learn how our fixed-cost, subscription-based service can help you achieve data reliability without the overhead of hiring. Visit RevOps JET to get started.