So, you’ve set up a process to get data from Salesforce into Snowflake. That’s a great first step, but it’s just the starting line. The real magic in Revenue Operations happens after the data lands, when you transform, enrich, and activate it. But what happens when your dbt model fails, a third-party API call hangs, or a sequence of tasks runs out of order? This is where your simple ELT script reveals its limits, and the need for robust data pipeline orchestration tools becomes crystal clear.

These tools are the conductors of your data orchestra. They don’t just move data; they manage the entire workflow, ensuring every step runs in the correct sequence, handling failures gracefully, and providing the visibility you need to trust your numbers. Think of an orchestrator as the central nervous system for your RevOps data stack, coordinating complex dependencies between various systems and ensuring your BI dashboards and revenue-critical automations are always powered by fresh, reliable data.

This guide is designed to help you navigate the crowded market of orchestrators. We’ll break down 12 of the top managed and open-source data pipeline orchestration tools, moving beyond marketing fluff to focus on what matters for a modern RevOps team. For each tool, you’ll find a clear analysis of its strengths, weaknesses, ideal use cases, and how well it slots into a Salesforce-to-Snowflake ecosystem. We’ve included direct links and screenshots to help you make an informed decision without the guesswork. Let’s find the right orchestrator to build the resilient, production-grade pipelines your business deserves.

1. Apache Airflow

As the undisputed heavyweight champion in the open-source world, Apache Airflow is a powerful, mature platform for programmatically authoring, scheduling, and monitoring workflows. It stands out by defining all pipelines as Directed Acyclic Graphs (DAGs) in Python, giving data engineers granular control and the full power of a general-purpose programming language to define complex logic. This “pipelines as code” approach is its defining feature, enabling version control, collaboration, and automated testing that you won’t find in UI-driven tools.

For a RevOps team with engineering talent, Airflow is a fantastic choice for orchestrating custom Salesforce to Snowflake pipelines. You can use its vast library of community-contributed “operators” to connect to virtually any API or data source. This flexibility makes it one of the most versatile data pipeline orchestration tools available.

Core Strengths & Limitations

Pros:

- No License Costs: Being open-source, you only pay for the infrastructure it runs on.

- Massive Ecosystem: A huge community means an extensive library of pre-built integrations (operators) and abundant support resources.

- Ultimate Flexibility: Define any workflow you can imagine in Python, making it perfect for bespoke, complex data logic.

Cons:

- High Operational Overhead: You are responsible for deploying, managing, scaling, and upgrading the entire infrastructure, which requires significant DevOps expertise.

- Steep Learning Curve: Mastering Airflow’s concepts like schedulers, workers, and executors at production scale can be challenging for teams new to data engineering.

Its robust nature and proven track record in enterprise environments make it a go-to option. You can discover more about its capabilities in our complete guide to the best data orchestration tools.

Website: https://airflow.apache.org

2. Astronomer Astro

For teams that love the power and flexibility of Airflow but want to sidestep the operational headache of managing it, Astronomer Astro is the answer. It’s a fully managed platform that provides Apache Airflow as a service, abstracting away the complex infrastructure so your data team can focus on writing DAGs, not managing Kubernetes clusters. Astro streamlines deployment, offers high-availability options, and adds enterprise-grade controls like SSO and dedicated support.

This approach dramatically speeds up time-to-value for organizations adopting Airflow. A RevOps team can leverage its existing Python skills to orchestrate complex data flows between Salesforce, marketing platforms, and Snowflake without needing dedicated DevOps resources. As one of the leading managed data pipeline orchestration tools, Astro provides the best of both worlds: open-source flexibility with the convenience of a SaaS product.

Core Strengths & Limitations

Pros:

- Reduced Operational Burden: Offloads all Airflow infrastructure management, including upgrades, scaling, and security, freeing up engineering time.

- Faster Time-to-Value: Enables teams to start building and running pipelines almost immediately with day-zero support for the latest Airflow versions.

- Enterprise-Ready: Comes with critical business features like single sign-on (SSO), role-based access control (RBAC), and service level agreements (SLAs).

Cons:

- Variable Cost: The usage-based pricing model for deployments and workers requires careful monitoring and governance to control spend.

- Potential Vendor Lock-in: While it runs open-source Airflow, heavy reliance on Astro-specific platform features could make future migrations more complex.

It’s the ideal choice for businesses committed to the Airflow ecosystem but prefer a managed service to accelerate development and ensure production-grade reliability.

Website: https://www.astronomer.io

3. Prefect

Prefect positions itself as a modern, Python-native workflow orchestration platform that prioritizes a phenomenal developer experience. Where some tools require significant infrastructure knowledge upfront, Prefect allows engineers to easily convert any Python function into a trackable, retriable task. This “code as workflows” approach feels incredibly intuitive and streamlines the process from local development to production deployment, offering a gentler learning curve than many alternatives.

Its hybrid deployment model is a key differentiator; you can run the open-source orchestration engine on your own infrastructure while leveraging the Prefect Cloud UI for monitoring and collaboration. For a RevOps team building custom integrations between Salesforce and Snowflake, this means you can get started quickly without managing a full-stack platform, making it one of the most accessible data pipeline orchestration tools for Python-savvy teams.

Core Strengths & Limitations

Pros:

- Developer-Friendly: Its Pythonic API and excellent local development experience make it a favorite among data engineers and scientists.

- Flexible Deployment: Run your agents anywhere (cloud, on-prem) while using the managed SaaS UI, giving you both control and convenience.

- Observability Built-In: The UI provides rich, real-time insights into flow runs, logs, and task states out of the box.

Cons:

- Evolving Pricing: The pricing model has changed over time, so teams should carefully evaluate the current plans to ensure they fit their budget and scale.

- Advanced Features Gated: Enterprise necessities like SSO, RBAC, and audit logs are typically reserved for higher-priced paid tiers.

The platform’s focus on observability also complements a robust operational strategy, which you can explore in our guide to data pipeline monitoring tools.

Website: https://www.prefect.io

4. Dagster

Dagster presents a modern, asset-centric approach to orchestration that shifts the focus from tasks to the data assets they produce, like tables, reports, or machine learning models. This unique perspective provides exceptional observability and data lineage out of the box. Instead of just knowing a task ran, you know that a specific, versioned, and tested data asset was created. It’s written in Python and designed to be developer-friendly, offering a clean UI and a clear programming model.

For RevOps teams building complex data flows, Dagster’s asset model makes it easier to track how a change in a Salesforce object impacts a downstream Snowflake table or dbt model. Its managed service, Dagster+, provides a SaaS platform that handles the infrastructure, making it one of the more accessible data pipeline orchestration tools for teams wanting to avoid DevOps overhead while maintaining code-based control.

Core Strengths & Limitations

Pros:

- Predictable Entry-Level Pricing: The Dagster+ SaaS offering has clear, published self-serve tiers, making it easy for smaller teams to get started without a hefty enterprise commitment.

- Improved Lineage and Observability: The asset-centric model is a game-changer for understanding data dependencies, debugging issues, and ensuring data quality across your stack.

- Modern Developer Experience: It offers a great local development workflow, built-in testing capabilities, and a slick user interface that engineers appreciate.

Cons:

- Credit-Based Usage Model: While predictable, the SaaS pricing is based on credits, which requires teams to monitor consumption to avoid unexpected overage fees as pipelines scale.

- Potential Refactoring Required: Migrating from traditional task-based DAG tools like Airflow may require a significant mindset shift and code refactoring to fit the asset model.

Website: https://dagster.io

5. Kestra

Kestra is a modern, open-source orchestration platform that uniquely combines declarative YAML-based workflows with a user-friendly UI. This hybrid approach aims to bridge the gap between technical and non-technical teams, allowing engineers to define pipelines as code while enabling business users to visualize, trigger, and understand data flows. It is language-agnostic, meaning you can orchestrate scripts written in Python, SQL, R, or any other language without being tied to a specific ecosystem.

For RevOps teams, Kestra’s plugin-based architecture and easy-to-use interface make it a compelling choice. You can quickly configure a pipeline to extract data from Salesforce, load it into Snowflake, and then trigger a dbt transformation, all from a visual editor or a simple YAML file. This flexibility makes it one of the most approachable data pipeline orchestration tools for teams that need both engineering power and business accessibility.

Core Strengths & Limitations

Pros:

- Declarative & Visual: The dual YAML and UI approach makes it accessible to a wider range of roles, from developers to data analysts.

- Flexible Deployment: Run it anywhere, on-premises or in any cloud, giving you full control over your data and infrastructure.

- Extensive Plugin Ecosystem: With over 900 plugins, it offers strong out-of-the-box connectivity for a wide array of sources and destinations.

Cons:

- Smaller Community: As a newer player, its community and third-party resources are less extensive than established tools like Airflow.

- Enterprise Pricing Model: Access to advanced governance and security features requires the Enterprise edition, which is priced via a sales-led process.

Website: https://kestra.io

6. Flyte (Union.ai)

Flyte is a powerful, Kubernetes-native workflow automation platform specifically engineered for large-scale data processing and machine learning pipelines. Its core strength lies in its emphasis on reproducibility and reliability, ensuring that every pipeline execution is versioned, auditable, and recoverable. While it has deep roots in ML, its scalable architecture makes it a strong contender for any complex data orchestration needs.

For a data-savvy RevOps team, Flyte provides the robust foundation needed for durable ETL jobs, like syncing massive datasets between Salesforce and Snowflake. Its ability to checkpoint and recover failed tasks automatically is a huge asset for mission-critical revenue data. This makes it one of the more resilient data pipeline orchestration tools for teams prioritizing stability over simplicity.

Core Strengths & Limitations

Pros:

- Built for Reliability: First-class support for checkpointing, retries, and caching makes pipelines highly fault-tolerant and reproducible.

- Kubernetes-Native Scalability: Leverages Kubernetes to scale tasks dynamically, efficiently using cluster resources for demanding workloads.

- Clear Managed Path: You can start with the open-source version and seamlessly transition to the managed Union.ai cloud offering as your needs grow, avoiding operational headaches.

Cons:

- Requires Kubernetes Expertise: Self-hosting Flyte demands a solid understanding of Kubernetes, which can be a significant hurdle for teams without DevOps skills.

- ML-Centric Focus: While versatile, its documentation and community often lean towards machine learning use cases, which may require some translation for pure data integration scenarios.

Flyte’s focus on durability makes it an excellent choice for teams that can’t afford data pipeline failures. You can learn more about the principles behind these types of systems in our guide on how to build a data pipeline.

Website: https://flyte.org

7. Argo Workflows

For teams that live and breathe Kubernetes, Argo Workflows is a powerful, container-native workflow engine. Unlike tools that simply run on Kubernetes, Argo is built for it, defining workflows as Kubernetes Custom Resource Definitions (CRDs). This deep integration allows you to orchestrate complex sequences of containerized jobs with incredible efficiency, making it a perfect fit for modern, cloud-native data and machine learning pipelines.

This Kubernetes-centric approach means you can containerize a specific data transformation step (like a Python script for Salesforce data cleanup) and orchestrate it alongside other containerized tasks as part of a larger Directed Acyclic Graph (DAG). This makes Argo one of the most resource-efficient data pipeline orchestration tools for organizations already invested in the container ecosystem.

Core Strengths & Limitations

Pros:

- Kubernetes-Native: Runs on any Kubernetes cluster, whether on-prem or in the cloud, leveraging native features like secrets and resource management.

- Efficient Parallelism: Excellent at running highly parallelized, container-based ETL jobs, scaling pods up and down as needed.

- Mature & Open-Source: A graduated CNCF project with a strong community, client SDKs, and a clean UI for workflow visualization.

Cons:

- Requires Kubernetes Expertise: You are responsible for managing the underlying Kubernetes cluster, which comes with significant operational overhead.

- Smaller Operator Ecosystem: Its library of pre-built integrations is not as extensive as Airflow’s, often requiring you to build custom container images for your tasks.

Website: https://argoproj.github.io/workflows/

8. Amazon Managed Workflows for Apache Airflow (MWAA)

For teams already invested in the AWS ecosystem, Amazon Managed Workflows for Apache Airflow (MWAA) offers a compelling proposition: all the power of open-source Airflow without the headaches of managing the underlying infrastructure. MWAA handles the provisioning, scaling, and maintenance of Airflow schedulers and workers, allowing your data engineers to focus purely on writing Python DAGs. This managed service provides the familiar Airflow UI and API, ensuring a smooth transition for those with existing experience.

This service is ideal for a RevOps team using AWS for its data warehouse (like Redshift or Snowflake on AWS) and other data services. Because it integrates natively with AWS Identity and Access Management (IAM), you can securely grant your DAGs permission to interact with S3, EC2, and other resources. This makes it one of the most streamlined data pipeline orchestration tools for organizations committed to AWS.

Core Strengths & Limitations

Pros:

- Reduced Operational Overhead: Eliminates the need for DevOps expertise to deploy, scale, and patch Airflow infrastructure on AWS.

- Seamless AWS Integration: Built-in security and connectivity with the entire AWS service suite, simplifying data movement and transformation tasks.

- Familiar Airflow Experience: Provides the standard Airflow interface, allowing teams to leverage existing skills and community resources without a steep learning curve.

Cons:

- Vendor Lock-in: Tightly coupled with the AWS ecosystem, making it less suitable for multi-cloud or hybrid-cloud strategies.

- Potential for High Costs: The pay-as-you-go model can become expensive for environments that require constant uptime or a large number of concurrent workers.

Website: https://aws.amazon.com/managed-workflows-for-apache-airflow/

9. Azure Data Factory

For organizations deeply invested in the Microsoft Azure ecosystem, Azure Data Factory (ADF) is the natural choice for cloud-native data integration and orchestration. It’s a fully managed, serverless service that allows you to visually compose data transformation and movement workflows or define them using JSON. ADF excels at connecting disparate data sources both on-premises and in the cloud, making it a powerful tool for building enterprise-grade ETL and ELT pipelines.

Its primary strength lies in its seamless integration with other Azure services like Azure Synapse Analytics, Azure Databricks, and Azure Blob Storage. For a RevOps team running its data warehouse on an Azure stack, ADF can orchestrate Salesforce to Synapse pipelines with native connectors and serverless compute that scales automatically. This makes it one of the most cohesive data pipeline orchestration tools for Azure-centric businesses.

Core Strengths & Limitations

Pros:

- Native Azure Integration: Unparalleled connectivity and performance within the Azure ecosystem, simplifying infrastructure management.

- Visual & Code Options: The drag-and-drop UI accelerates development for simpler pipelines, while code-based authoring provides control for complex logic.

- Serverless Scaling: Pay-as-you-go pricing and automatic scaling mean you only pay for what you use without managing servers.

Cons:

- Azure Lock-in: Its tight coupling to the Azure environment makes it less ideal for multi-cloud or cloud-agnostic strategies.

- Complex Pricing: The pricing model, with separate costs for pipeline orchestration, data movement, and data flow execution, can be difficult to predict and manage.

Website: https://azure.microsoft.com/en-us/products/data-factory/

10. Google Cloud Composer

For teams already invested in the Google Cloud Platform (GP) ecosystem, Google Cloud Composer offers a compelling proposition: Apache Airflow without the operational headaches. It’s a fully managed workflow orchestration service that handles the underlying infrastructure, allowing you to focus purely on building your DAGs. Composer automates the creation of Airflow environments, including scaling workers, patching, and maintaining the core components.

This makes it an excellent choice for a RevOps team using BigQuery as their data warehouse instead of Snowflake. The native integration with other GCP services like Cloud Storage, Dataflow, and BigQuery is seamless, simplifying authentication and connectivity. It provides the power of Airflow with the convenience of a managed cloud service, making it one of the most practical data pipeline orchestration tools for GCP users.

Core Strengths & Limitations

Pros:

- Reduced Operational Toil: Google manages the Airflow infrastructure, freeing your team from complex DevOps tasks like scaling and patching.

- Deep GCP Integration: Effortlessly connect to and orchestrate jobs across the entire Google Cloud suite of products.

- Autoscaling: Environments can automatically scale workers based on workload, optimizing cost and performance without manual intervention.

Cons:

- Platform Lock-in: The service is deeply tied to the GCP ecosystem, making it less suitable for multi-cloud or hybrid environments.

- Complex Pricing: Costs are based on multiple SKUs including compute, storage, memory, and environment fees, which can be difficult to forecast.

Website: https://cloud.google.com/composer

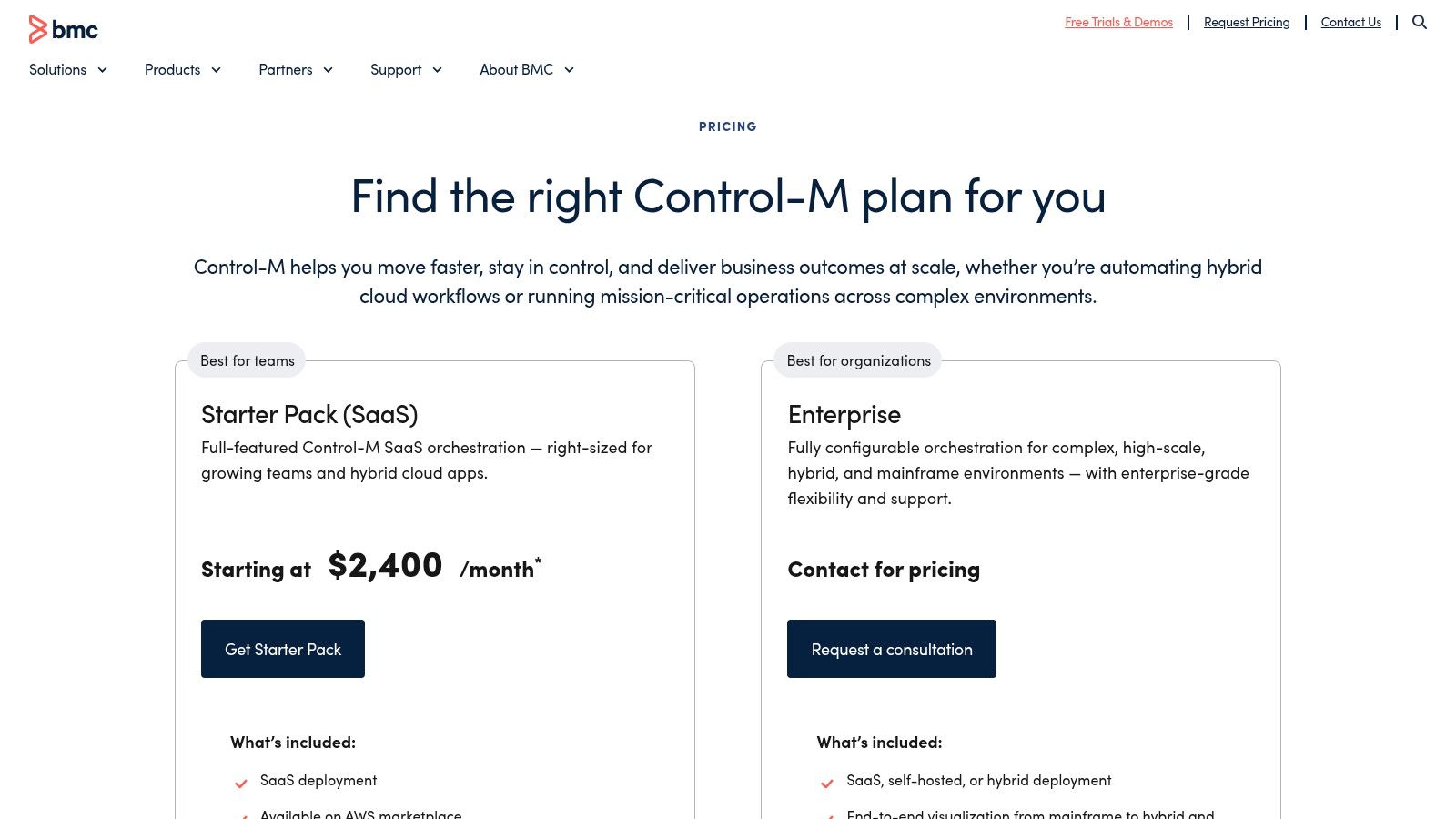

11. BMC Control-M

For organizations with deeply entrenched, complex, and heterogeneous IT environments, BMC Control-M is an enterprise-grade workload automation and orchestration platform that has been a trusted name for decades. It excels at managing workflows across a vast landscape, from legacy mainframes to modern cloud services. Unlike many cloud-native newcomers, its core strength is providing a single point of control and observability over business applications and data pipelines that span hybrid and multi-cloud infrastructure.

This platform is not typically the first choice for a startup’s RevOps team but is often a mandated standard in large enterprises. If your Salesforce and Snowflake pipelines must integrate with legacy ERP or on-premise systems, Control-M provides the robust connectors and governance needed. It’s one of the few data pipeline orchestration tools designed to handle this level of complexity with features like advanced SLA management and managed file transfer.

Core Strengths & Limitations

Pros:

- Enterprise-Grade Governance: Provides extensive observability, robust security, and global support designed for large-scale, mission-critical operations.

- Handles Extreme Heterogeneity: Uniquely suited for managing workflows across mainframe, on-premise, and multi-cloud environments from a single platform.

- SaaS and Self-Hosted: Offers both a SaaS option for a managed experience and a self-hosted version for maximum control.

Cons:

- High Entry Price: Procurement is typically handled through enterprise sales contracts, making it a significant investment compared to more modern tools.

- Legacy Complexity: Can feel overly complex and less agile for teams accustomed to nimble, code-first, open-source orchestrators.

Website: https://www.bmc.com/it-solutions/control-m-pricing.html

12. AWS Marketplace – Apache Airflow with Astro by Astronomer

For companies deeply embedded in the AWS ecosystem, procuring software through the AWS Marketplace simplifies billing and procurement immensely. This listing for Astro, Astronomer’s managed Airflow platform, allows organizations to purchase one of the best data pipeline orchestration tools using their existing AWS accounts and potentially leverage committed spend. It’s not a different product than Astro itself, but rather a different purchasing and deployment channel tailored for AWS-native enterprises.

This approach streamlines the process for RevOps teams whose finance departments prefer consolidated vendor management and billing through a single provider. You get all the benefits of a managed Airflow service- like reduced operational overhead and expert support- while keeping the commercial relationship neatly tucked under your AWS agreement. This is a strategic choice for simplifying vendor onboarding and budget allocation.

Core Strengths & Limitations

Pros:

- Simplified Procurement: Consolidates billing directly into your AWS invoice, making it easier for finance and procurement teams to manage.

- Leverage AWS Spend: Allows companies to use their existing AWS Enterprise Discount Program (EDP) or other committed use discounts toward Astro.

- Private Offers: Supports custom pricing and terms through AWS Marketplace Private Offers, enabling tailored enterprise agreements.

Cons:

- Marketplace Specifics: Terms, quotas, and available pricing plans can sometimes differ slightly from a direct purchase and should be reviewed carefully.

- No Product Difference: The underlying platform trade-offs, like usage-based pricing, are the same as purchasing Astro directly; this is purely a procurement channel.

Website: https://aws.amazon.com/marketplace/pp/prodview-mjdamtyyfhbao

Data Pipeline Orchestration — 12-Tool Comparison

| Product | Deployment & Integrations | Developer UX & Observability | Value Proposition | Target Audience | Pricing Notes |

|---|---|---|---|---|---|

| Apache Airflow | Self-hosted; Python DAGs; broad operators for AWS/GCP/Azure | Rich UI; mature ecosystem; production ops learning curve | Full control and no license fees; enterprise-proven | Teams wanting DIY control and extensible integrations | OSS (no license); infra/ops costs apply |

| Astronomer Astro | Managed Airflow clusters; SSO/SCIM; cloud integrations | Airflow UI + managed upgrades and enterprise support | Offloads Airflow ops to speed time-to-value | Teams who want Airflow without managing infra | Usage-based pricing; variable spend; marketplace option |

| Prefect | Open-source engine + SaaS/enterprise; Python-first APIs | Very Pythonic local dev; good monitoring; some features paid | Developer-friendly orchestration with flexible deployment | Python teams seeking local DX or SaaS convenience | Free tier; per-developer/usage pricing for paid tiers |

| Dagster | Asset-oriented orchestration; dbt/ELT/ML integrations; Dagster+ SaaS | Strong lineage & observability; modern developer UX | Asset-centric pipelines that improve lineage and observability | Analytics/ML teams needing asset tracking | Published tiers; credit-based usage can incur overages |

| Kestra | Declarative workflows; runs on-prem or cloud; 900+ plugins | Hybrid code + no-code UI; business-friendly tooling | Flexible deployments with rich plugin ecosystem | Teams needing hybrid UI and many integrations | OSS (Apache 2.0); Enterprise edition via sales |

| Flyte (Union.ai) | Kubernetes-native; open-source core; managed by Union.ai option | Built for reproducibility, checkpointing and recovery | Durable, reproducible pipelines for data/ML workloads | K8s-savvy ML/data engineering teams | OSS core; managed/pay-as-you-go pricing for hosted service |

| Argo Workflows | Kubernetes CRD-based; container-first; supports S3/GCS/Blob artifacts | CLI + UI; efficient for parallel containerized ETL | Best for containerized pipelines on Kubernetes | Teams standardized on Kubernetes and containers | OSS; Kubernetes infra costs apply |

| Amazon MWAA | Managed Apache Airflow on AWS; deep AWS integrations | Airflow UI with autoscaling workers and AWS security | Removes Airflow ops when running on AWS | AWS-centric teams needing managed Airflow | Pay-as-you-go environment hours; costs grow with workers |

| Azure Data Factory | Serverless orchestration; visual pipelines; 90+ connectors | Visual authoring; telemetry and regional compliance | Fast onboarding and native fit for Azure data stack | Azure-centric enterprises and analytics teams | Pay-as-you-go across orchestration, movement, data-flow SKUs |

| Google Cloud Composer | Managed Airflow on GCP; autoscaling workers | Managed infra with Airflow UI; GCP integrations | Managed Airflow tightly integrated with GCP services | GCP-focused teams running Airflow workloads | Metered by DCU-hours (compute/memory/storage); multiple SKUs |

| BMC Control-M | Enterprise SaaS or self-hosted; hybrid/multi-cloud & mainframe support | Enterprise governance, SLA mgmt, 24×7 support and observability | Enterprise-grade orchestration for large heterogeneous estates | Large enterprises with legacy systems and global ops | Higher entry price; procurement via sales/contracts |

| AWS Marketplace – Apache Airflow (Astro) | Astronomer Astro sold via AWS Marketplace; runs in AWS regions | Same managed Astro experience; consolidated billing via AWS | Easier procurement and billing consolidation for AWS shops | Enterprises preferring marketplace procurement & billing | Marketplace pay-as-you-go/annual plans; same platform spend as Astro |

Making the Right Choice for Your RevOps Engineering Needs

And there you have it, a deep dive into the world of data pipeline orchestration tools. We’ve journeyed from the open-source powerhouses like Apache Airflow and Argo to the sleek, managed cloud offerings from Prefect, Dagster, and Astronomer. It’s clear that the “best” tool isn’t a one-size-fits-all answer; it’s a strategic choice that hinges entirely on your team’s unique DNA.

Choosing the right orchestrator is less about picking the one with the most features and more about honest self-assessment. Does your team have the engineering firepower and desire to manage Kubernetes clusters for Argo or fine-tune Airflow workers? If so, the unmatched flexibility of open-source might be your perfect match. Or, are you a lean RevOps team where every minute spent on infrastructure is a minute stolen from driving revenue strategy? In that case, the managed, serverless, and hybrid options from Astro, Prefect Cloud, or Dagster+ are likely your fast lane to success.

Key Takeaways for Your RevOps Stack

As you weigh your options, especially for that critical Salesforce-to-Snowflake pipeline, keep these core principles at the forefront of your decision-making process:

- Total Cost of Ownership (TCO) is King: Don’t just look at the monthly subscription fee. Factor in the hidden costs of engineering time for setup, maintenance, upgrades, and troubleshooting. An open-source tool might be “free,” but the salary of the engineer dedicated to keeping it running certainly isn’t. Managed services often provide a more predictable and ultimately lower TCO.

- Developer Experience Dictates Adoption: A tool can be incredibly powerful, but if it’s cumbersome for your team to use, your pipelines will be brittle and slow to evolve. Features like local testing environments, intuitive UIs for non-engineers, and CI/CD integrations (like Dagster’s branching or Prefect’s projects) are not just nice-to-haves; they are critical for team velocity.

- Observability is Non-Negotiable: For RevOps, a failed pipeline isn’t just a technical glitch; it’s a potential hit to sales forecasts, marketing campaigns, or commission payouts. Your chosen tool must provide robust, real-time monitoring, granular logging, and intelligent alerting. You need to know why a Salesforce API call failed and have a system that can automatically retry or escalate the issue before it impacts the business.

Your Actionable Next Steps

Feeling overwhelmed? Don’t be. Start small. Pick two or three tools that seem like the best fit based on our analysis and run a proof-of-concept (POC).

- Define a Simple, High-Value Use Case: Choose one specific pipeline, like syncing Salesforce Opportunity data to a Snowflake staging table.

- Timebox Your Evaluation: Give your team a set period, perhaps one or two sprints, to build that same pipeline in each of your chosen tools.

- Measure What Matters: Evaluate each tool against your key criteria. How long did it take to get the first pipeline running? How easy was it to debug an error? What was the experience like for both your data engineers and your RevOps analysts?

This hands-on approach will quickly cut through the marketing jargon and reveal which of these data pipeline orchestration tools truly aligns with your team’s workflow, technical skills, and business objectives. Your goal is a reliable, scalable system that makes your data work for you, not the other way around.

Tired of weighing infrastructure trade-offs and just want reliable, production-grade data pipelines that connect your RevOps stack? The expert team at RevOps JET specializes in building and managing the exact kind of robust, orchestrated data systems discussed in this article, freeing you to focus on strategy. See how our on-demand engineering service can accelerate your data initiatives at RevOps JET.