In today’s RevOps and data environments, simply moving data isn’t enough. You need to coordinate, schedule, and monitor complex workflows with precision. Think of it like an orchestra: without a conductor, you get noise. With one, you get a symphony. Data orchestration tools are that conductor for your data stack, ensuring every pipeline runs on time, failures are handled gracefully, and your Salesforce, Snowflake, and dbt models work in perfect harmony.

But with a crowded market of open-source frameworks, managed services, and enterprise platforms, which one is right for your team? Choosing the wrong tool can lead to brittle pipelines, mounting technical debt, and a frustrated team. How do you find the one that fits your specific needs, whether you’re a lean RevOps team managing CRM data or a large data engineering group orchestrating enterprise-wide workflows?

This guide cuts through the noise. We’re diving deep into the 12 best data orchestration tools, evaluating each on the features that truly matter for modern data teams. We’ll cover everything from DAG scheduling and Change Data Capture (CDC) support to idempotency, observability, and critical API integrations. We’ll also look at the tradeoffs between managed services and open-source solutions.

Our goal is to help you find the perfect fit. For each tool, you’ll find a detailed analysis, pros and cons, screenshots, direct links, and clear recommendations based on common use cases. Whether you’re wrangling Salesforce data, scaling with Snowflake, or just starting to build out your data practice, you’ll find the answers you need right here to select the best platform for your pipeline.

1. Apache Airflow

When it comes to the best data orchestration tools, Apache Airflow is often the first name that comes to mind, and for good reason. It’s the open-source industry standard for programmatically authoring, scheduling, and monitoring workflows. For data and RevOps teams, this means you can define complex data pipelines as code using Python, giving you immense flexibility and control.

Airflow’s core concept is the Directed Acyclic Graph (DAG), which allows you to set up tasks and their dependencies in a clear, logical sequence. This is perfect for multi-step RevOps processes, like syncing customer data from Salesforce to a data warehouse, running transformations with dbt, and then pushing enriched data into a marketing automation platform. For instance, you can orchestrate a pipeline that triggers only when a new lead meets certain criteria, directly impacting your KPIs for lead generation.

Key Differentiators & Considerations

The biggest draw for Airflow is its vibrant community and massive ecosystem of pre-built integrations, called “Providers.” Need to connect to Snowflake, AWS S3, Google BigQuery, or Salesforce? There’s a provider package for that, which significantly speeds up development.

However, its power comes with a tradeoff. As a self-hosted tool, your team is responsible for managing the underlying infrastructure, handling upgrades, and ensuring security. This operational overhead can be steep, especially for smaller teams without dedicated DevOps or data engineering resources.

| Feature | Apache Airflow |

|---|---|

| Model | Open-Source (Apache 2.0 License) |

| Cost | Free (Infrastructure costs apply) |

| Core Language | Python |

| Key Strength | Unmatched flexibility & huge community |

| Best For | Teams with engineering resources who need ultimate control and customization. |

Website: https://airflow.apache.org/

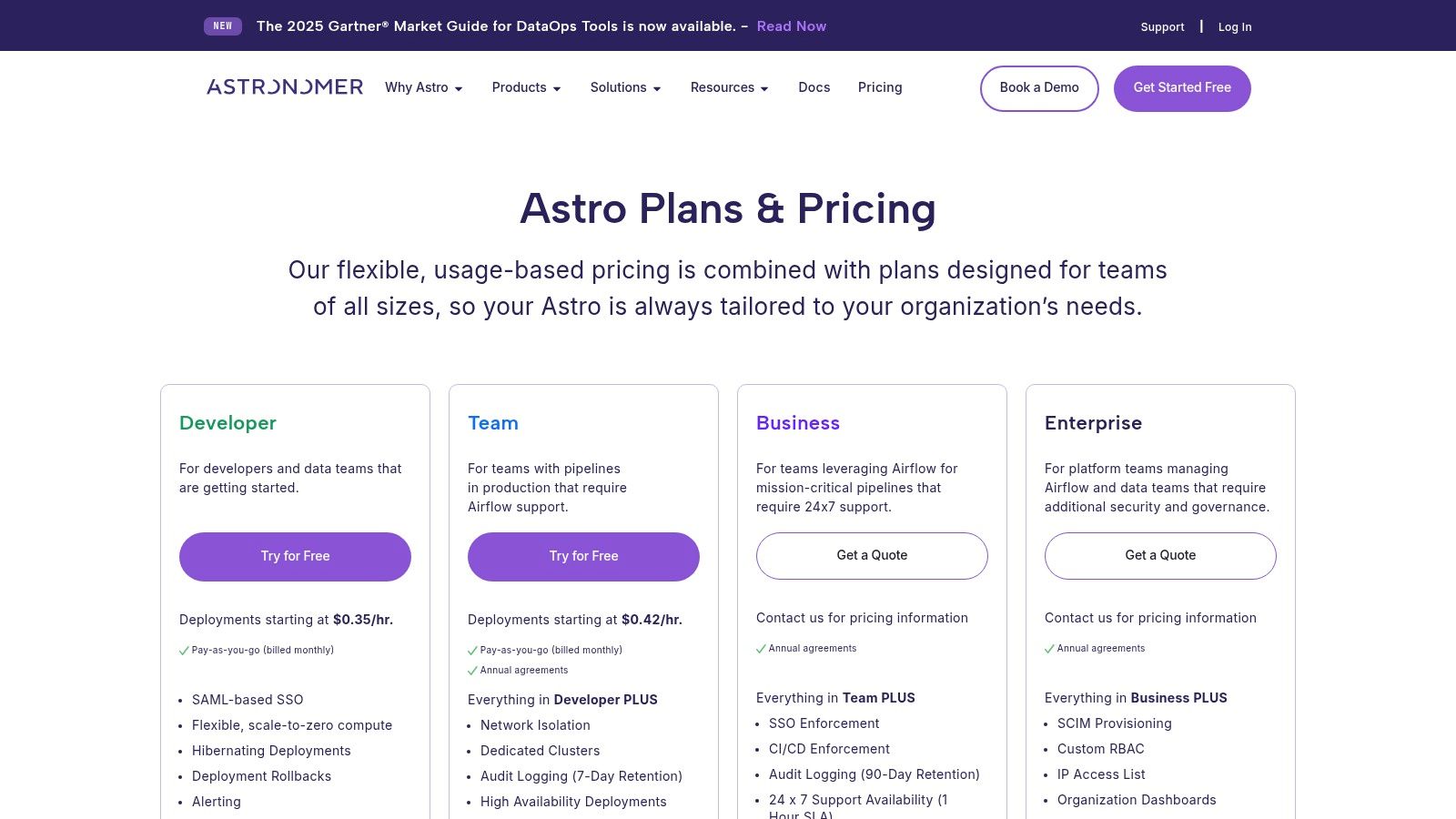

2. Astronomer (Astro) – Managed Airflow

If you love the power of Apache Airflow but want to avoid the operational headache of managing it yourself, Astronomer is your answer. It provides a fully managed, enterprise-grade Airflow experience on your cloud of choice (AWS, GCP, or Azure). For RevOps teams, this means you can leverage one of the best data orchestration tools without needing a dedicated DevOps team, focusing instead on building pipelines that drive revenue.

Astro streamlines the entire Airflow lifecycle, from local development with their CLI to production deployment with features like CI/CD integration and deployment rollbacks. This is ideal for a RevOps workflow where you might need to quickly deploy a new DAG to sync updated lead scoring logic from your data warehouse back to Salesforce, test it, and roll back if it doesn’t perform as expected.

Key Differentiators & Considerations

The key benefit of Astro is that it’s Airflow, supercharged. You get day-zero support for the latest Airflow releases, autoscaling workers to handle spiky workloads, and robust security features like SSO and audit logging. This provides the stability and scalability often required for critical revenue-generating pipelines.

The tradeoff is cost. While its usage-based pricing is transparent and granular, it can become more expensive than a self-hosted setup for very large, continuously running workloads. However, for most teams, the price is a worthwhile exchange for the reduced operational burden, faster time-to-value, and expert support.

| Feature | Astronomer (Astro) |

|---|---|

| Model | Managed Open-Source |

| Cost | Usage-based pricing (Starts with a free tier) |

| Core Language | Python |

| Key Strength | Enterprise-grade managed Airflow with expert support. |

| Best For | Teams who want the power of Airflow without the operational overhead. |

Website: https://www.astronomer.io/pricing/?utm_source=openai

3. Google Cloud Composer

For teams already invested in the Google Cloud Platform, Google Cloud Composer offers a compelling path to managed data orchestration. It’s essentially Apache Airflow as a fully managed service, removing the significant operational burden of hosting and maintaining the infrastructure yourself. This lets your RevOps and data teams focus on building pipelines rather than managing servers, making it one of the best data orchestration tools for GCP-native environments.

The primary advantage of Cloud Composer is its deep, native integration with the GCP ecosystem. You can effortlessly orchestrate jobs across BigQuery, Dataflow, Dataproc, and Cloud Storage, making it perfect for complex pipelines that leverage multiple Google services. For example, you could trigger a workflow to process new user event data from Google Analytics, load it into BigQuery for analysis, and then push segmented audiences to Google Ads. This direct integration streamlines operations and reduces the complexity of tracking user behavior, which can be a key factor when analyzing metrics like your bounce rate in Google Analytics.

Key Differentiators & Considerations

Composer’s managed nature is its key selling point. Google handles the upgrades, security patching, and scaling of your Airflow environment, which is a massive relief for teams without dedicated DevOps support. You get the power of Airflow without the headache of managing it.

However, this convenience comes with a few caveats. The pricing model can be complex, with separate charges for compute, storage, and environment fees that require careful monitoring to manage costs. Furthermore, its strength is its GCP-centricity; connecting to services outside the Google ecosystem, like AWS or Azure, is possible but often requires more configuration and effort than using a more cloud-agnostic tool.

| Feature | Google Cloud Composer |

|---|---|

| Model | Managed Service (Based on Open-Source Airflow) |

| Cost | Usage-based (Compute, storage, env. fees) |

| Core Language | Python |

| Key Strength | Deep GCP integration & managed infrastructure |

| Best For | Teams building data pipelines primarily within the Google Cloud ecosystem. |

Website: https://cloud.google.com/composer

4. Amazon MWAA (Managed Workflows for Apache Airflow)

For teams already invested in the AWS ecosystem, Amazon MWAA offers a compelling proposition. It takes the power and flexibility of Apache Airflow and removes the heavy lifting of infrastructure management. As a managed service, AWS handles the provisioning, scaling, and maintenance of the Airflow environment, allowing your data and RevOps teams to focus on building pipelines instead of managing servers.

This makes it one of the best data orchestration tools for organizations that want Airflow’s capabilities without the associated DevOps overhead. You get native integration with AWS services like S3, Redshift, and Lambda, all secured within your own Virtual Private Cloud (VPC) and managed through AWS Identity and Access Management (IAM). This tight integration is perfect for orchestrating tasks like loading files from S3 into a data warehouse or triggering Lambda functions as part of a larger data workflow.

Key Differentiators & Considerations

The primary advantage of MWAA is its seamless integration with the AWS stack and its simplified operational model. The auto-scaling workers ensure you have the right amount of compute power for your workloads, helping to manage costs effectively. Setup can be handled through the AWS Console, CLI, or CloudFormation, providing flexibility for different team workflows.

However, its strength is also its limitation. MWAA is inherently AWS-centric, and orchestrating processes across multi-cloud environments can require additional configuration and effort. While the pricing is transparent and based on usage, costs for large, continuously running environments can accumulate, so it’s important to monitor your usage closely.

| Feature | Amazon MWAA |

|---|---|

| Model | Managed Service (Based on Open-Source) |

| Cost | Per-hour usage-based pricing |

| Core Language | Python |

| Key Strength | Managed Airflow with deep AWS integration |

| Best For | Teams heavily invested in AWS who want Airflow’s power without the operational burden. |

Website: https://aws.amazon.com/managed-workflows-for-apache-airflow/

5. Azure Data Factory (ADF)

For teams heavily invested in the Microsoft ecosystem, Azure Data Factory (ADF) is one of the best data orchestration tools available. It’s a fully managed, serverless cloud data integration service that excels at building ETL and ELT pipelines. RevOps teams can use its low-code, drag-and-drop visual interface to quickly create data workflows without writing extensive code.

ADF is particularly powerful for orchestrating data movements and transformations within Azure. You can easily build a pipeline that pulls contact data from an on-premise SQL Server, copies it to Azure Blob Storage, and then uses a Databricks activity to run a cleansing script before loading it into Azure Synapse Analytics. This seamless integration makes it a go-to choice for organizations leveraging Microsoft’s cloud platform.

Key Differentiators & Considerations

ADF’s biggest strength is its native integration with the entire Azure stack. With over 90 built-in connectors, connecting to sources like Azure SQL, Cosmos DB, and even third-party services like Salesforce is straightforward. It also provides flexibility through its Integration Runtimes, allowing you to execute data flows within Azure’s managed network or on your own self-hosted infrastructure for hybrid scenarios.

The main consideration is its pricing model, which is highly granular and based on activity runs, data movement, and pipeline orchestration. While this pay-as-you-go approach can be cost-effective for infrequent jobs, costs can become complex and unpredictable for high-frequency, complex pipelines. The UI-first approach, while user-friendly, can also feel less flexible than pure code-first frameworks for teams with deep engineering expertise.

| Feature | Azure Data Factory |

|---|---|

| Model | Managed Cloud Service |

| Cost | Pay-as-you-go (based on usage) |

| Core Language | Visual UI (with JSON, .NET, Python options) |

| Key Strength | Deep integration with the Azure ecosystem |

| Best For | Organizations heavily using Microsoft Azure services that prefer a low-code, visual workflow builder. |

Website: https://azure.microsoft.com/en-us/pricing/details/data-factory/ssis?utm_source=openai

6. Prefect (open-source + Prefect Cloud)

If Airflow represents the traditional standard, Prefect embodies the modern, Python-native approach to data orchestration. It’s a strong contender for the best data orchestration tools, especially for teams who prioritize developer experience and a more intuitive, code-first workflow. For RevOps, this translates to faster pipeline development and easier maintenance when building complex logic.

Prefect’s design treats failures as a natural part of any data pipeline, offering robust features for retries, caching, and state management right out of the box. This is incredibly useful for RevOps tasks that can be flaky, like syncing large datasets from a CRM or triggering actions based on real-time API responses. You can easily build a pipeline that pulls customer usage data, runs a model, and flags at-risk accounts, directly supporting your efforts in predicting customer churn. The modern UI provides excellent observability into flow runs and automations.

Key Differentiators & Considerations

Prefect’s killer feature is its flexible execution model. You can run the open-source engine entirely on your own infrastructure or use the managed Prefect Cloud control plane while your code executes on your own “workers” (the hybrid model). This gives you the observability and convenience of a SaaS tool without ever sending sensitive data outside your environment.

The main consideration involves the feature gating between the open-source version and the paid Prefect Cloud tiers. Advanced features like RBAC, SSO, and automations are part of the paid plans. Teams should evaluate the pricing tiers and rate limits to ensure the chosen plan aligns with their expected scale and governance needs.

| Feature | Prefect |

|---|---|

| Model | Open-Source (Apache 2.0) & Managed Cloud |

| Cost | Free (OSS), Cloud has free & paid tiers |

| Core Language | Python |

| Key Strength | Excellent developer experience & hybrid model |

| Best For | Python-centric teams wanting a modern UI and flexible deployment options. |

Website: https://www.prefect.io/

7. Dagster & Dagster+ (Dagster Cloud)

Dagster shifts the focus from traditional task-based pipelines to a more modern, asset-centric approach. Instead of just orchestrating tasks, you orchestrate the creation and maintenance of “software-defined assets” like tables, reports, or machine learning models. This makes it one of the best data orchestration tools for teams who want to build reliable, maintainable, and observable data platforms.

This asset-first philosophy provides incredible context. For RevOps, this means you can easily see the full lineage of a KPI dashboard, tracing it back from the visualization tool, through dbt transformations, all the way to raw data from Salesforce. This built-in observability and data cataloging helps you understand dependencies and troubleshoot issues much faster than with traditional orchestrators.

Key Differentiators & Considerations

Dagster’s biggest differentiator is its developer experience and the clarity that comes from its asset-based model. It’s designed for local development and testing, making it easier to write robust data pipelines. The UI is also a major plus, offering rich visualization of asset lineage and operational metadata out of the box.

The managed version, Dagster+, provides a serverless, zero-ops experience with a credit-based pricing model that can be advantageous for teams wanting to avoid infrastructure management. However, this model requires some upfront estimation to manage costs effectively. While the open-source version is powerful, scaling and managing it requires dedicated engineering resources, similar to Airflow.

| Feature | Dagster & Dagster+ |

|---|---|

| Model | Open-Source & Managed Cloud |

| Cost | Free (OSS) / Credit-based (Cloud) |

| Core Language | Python |

| Key Strength | Asset-centric orchestration & observability |

| Best For | Data-aware teams who value lineage, testability, and a strong developer workflow. |

Website: https://dagster.io/pricing

8. Argo Workflows

For teams living and breathing in the Kubernetes ecosystem, Argo Workflows emerges as one of the best data orchestration tools available. As a CNCF open-source project, it is purpose-built to orchestrate parallel jobs on Kubernetes, making it a natural fit for container-native data processing and machine learning pipelines. Every task in an Argo workflow runs as its own container, providing ultimate isolation and environment consistency.

Workflows are defined as Kubernetes Custom Resource Definitions (CRDs), which means your data pipelines are managed just like any other Kubernetes object. This is a huge win for RevOps and data teams who have already standardized their infrastructure on Kubernetes. You can define complex Directed Acyclic Graphs (DAGs) in YAML, orchestrating tasks like pulling data from an API, running a dbt transformation in a container, and then pushing the results to a destination.

Key Differentiators & Considerations

Argo’s core strength is its deep integration with Kubernetes. It scales with your cluster, leverages native Kubernetes features for scheduling and secrets management, and is completely cloud-agnostic. The UI provides excellent visualization for DAGs, making it easy to monitor and debug complex pipeline executions.

The main consideration is that it requires a solid understanding of Kubernetes. Your team is responsible for managing the cluster, installing Argo, and handling all operational aspects. This isn’t a managed SaaS tool; it’s a powerful engine for organizations that have committed to a container-first infrastructure and have the DevOps expertise to support it.

| Feature | Argo Workflows |

|---|---|

| Model | Open-Source (Apache 2.0 License) |

| Cost | Free (Kubernetes cluster costs apply) |

| Core Language | YAML (for definitions) |

| Key Strength | Kubernetes-native orchestration |

| Best For | Teams with strong Kubernetes expertise running data and ML workloads in containers. |

Website: https://argoproj.github.io/workflows/

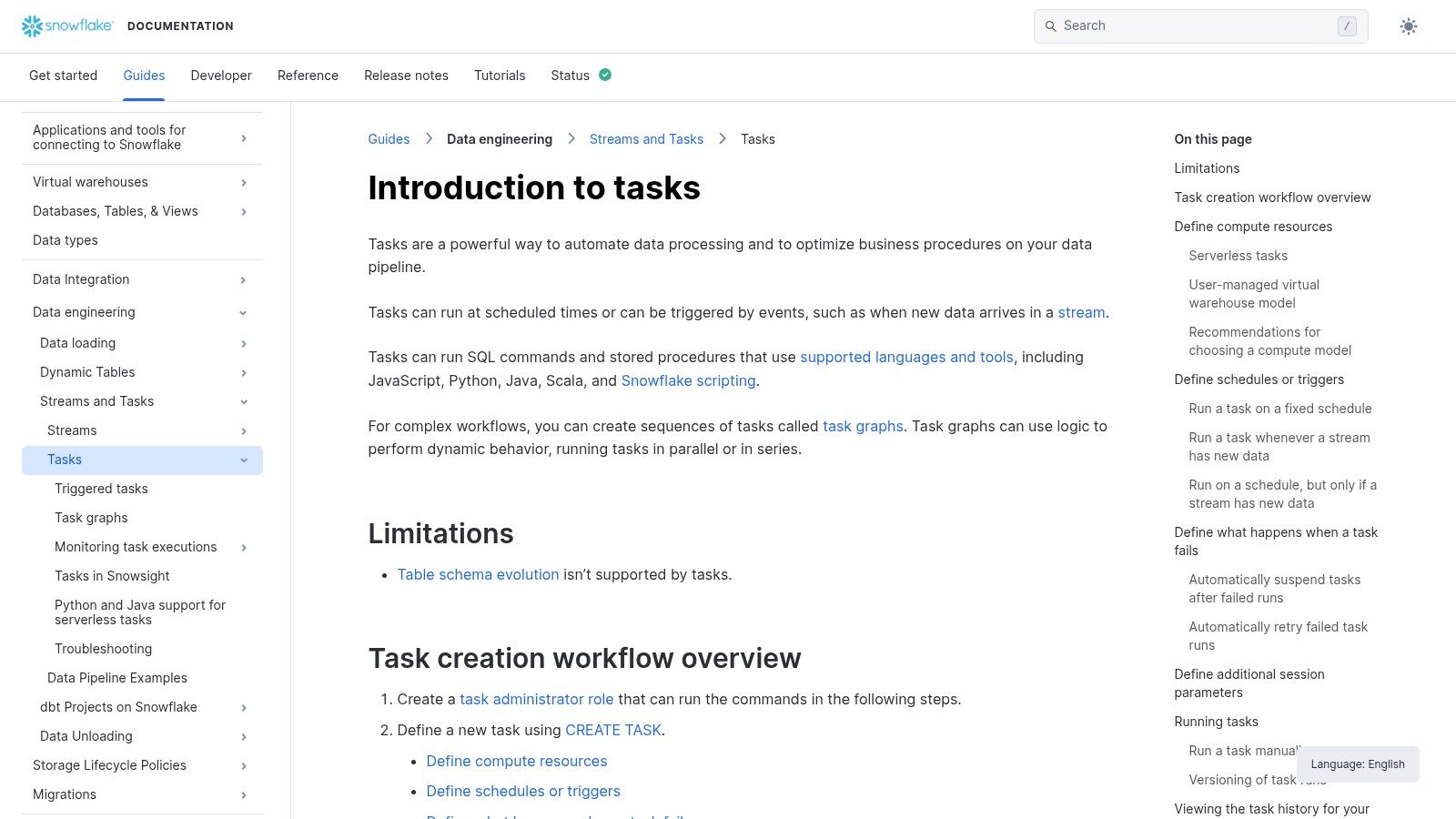

9. Snowflake Tasks / Task Graphs (Snowflake Workflows)

For teams living and breathing within the Snowflake ecosystem, sometimes the best data orchestration tools are the ones built right into the platform you already use. Snowflake Tasks and Task Graphs offer native orchestration capabilities, allowing you to schedule and execute SQL statements, stored procedures, and Snowpark code without ever leaving your data cloud environment. This is a game-changer for RevOps teams whose data transformation and enrichment logic primarily resides inside Snowflake.

With Task Graphs, you can define Directed Acyclic Graphs (DAGs) directly in Snowflake to manage complex dependencies. Imagine a workflow that ingests raw lead data, runs a series of SQL transformations to clean and score it, and then executes a Python stored procedure to match leads to accounts. All of this can be orchestrated natively, triggering on a schedule or based on data stream events, keeping your entire pipeline tightly coupled with your data.

Key Differentiators & Considerations

The primary advantage of Snowflake Tasks is the elimination of a separate control plane. There’s no external scheduler to manage, secure, or pay for. Everything is integrated, simplifying your architecture and leveraging Snowflake’s robust security and governance model. This tight integration is especially powerful when using Snowpark for complex Python, Java, or Scala logic.

However, its strength is also its limitation. This solution is purpose-built for orchestrating work within Snowflake. If your pipelines involve numerous external systems, APIs, or services outside the data cloud, you’ll still need a more general-purpose orchestrator like Airflow or Prefect. Pricing is also a key consideration, as tasks run on serverless compute, and costs are tied directly to your Snowflake consumption.

| Feature | Snowflake Tasks / Task Graphs |

|---|---|

| Model | Native feature within Snowflake |

| Cost | Consumption-based (Snowflake credits) |

| Core Language | SQL, Python, Java, Scala, JavaScript |

| Key Strength | Zero-management orchestration inside Snowflake |

| Best For | Teams whose data pipelines run almost entirely within the Snowflake ecosystem. |

Website: https://docs.snowflake.com/en/user-guide/tasks-intro

10. BMC Control-M

For enterprise-level organizations that require robust governance and service level agreement (SLA) management, BMC Control-M is a heavyweight contender among the best data orchestration tools. It’s a mature platform designed for orchestrating complex application and data workflows across hybrid environments, from on-premise mainframes to multi-cloud services. For RevOps, this means you can reliably connect legacy systems with modern cloud data stacks, ensuring critical processes like financial reporting or customer data synchronization meet strict business deadlines.

Control-M’s strength lies in its end-to-end management capabilities. You can define, schedule, and monitor intricate job flows through a graphical interface, which can be a significant advantage for teams with mixed technical skill sets. It’s built for mission-critical operations, offering features like high availability, disaster recovery, and predictive SLA monitoring to alert you of potential delays before they impact the business.

Key Differentiators & Considerations

The primary draw for Control-M is its enterprise-grade feature set. It boasts a vast library of integrations, strong security controls, and sophisticated scheduling options that go beyond simple time-based triggers. Its Jobs-as-Code approach allows developers to define workflows within their CI/CD pipelines, blending agility with centralized operational control.

This power, however, comes at a price. The total cost of ownership is considerably higher than open-source alternatives, and its extensive feature set might be overwhelming for smaller teams or simpler data pipeline needs. It’s a tool designed for organizations where the cost of a failed data process is extremely high and operational resilience is paramount.

| Feature | BMC Control-M |

|---|---|

| Model | Commercial (SaaS & On-Premise) |

| Cost | Paid (SaaS Starter Pack pricing available) |

| Core Language | GUI-based with Jobs-as-Code (JSON, REST API) |

| Key Strength | Enterprise-grade governance, SLA management & reliability |

| Best For | Large organizations with complex, hybrid-cloud workflows and strict compliance requirements. |

Website: https://www.bmc.com/it-solutions/control-m-pricing.html?utm_source=openai

11. IBM DataStage

For enterprises deeply invested in robust, governed data integration, IBM DataStage stands out as a powerful, feature-rich platform. As one of the more traditional and comprehensive best data orchestration tools, it goes beyond simple task scheduling to offer a complete ETL/ELT design environment. For RevOps teams in large organizations, this means you can build, manage, and monitor complex data pipelines with built-in data quality and metadata management, ensuring high levels of trust and compliance.

DataStage excels in hybrid and multi-cloud environments, offering deployment flexibility through its SaaS offering on IBM Cloud Pak for Data or as a platform edition. This allows you to orchestrate data flows that span on-premise systems like a legacy ERP and modern cloud warehouses like Snowflake. A RevOps team could use it to pull sensitive customer financials from an on-premise database, cleanse and transform it according to strict governance rules, and load it into a cloud data mart for analysis.

Key Differentiators & Considerations

The primary strength of DataStage is its all-in-one, enterprise-grade capability set. The parallel processing engine and elastic scaling features are designed for high-volume, mission-critical workloads, which is often overkill for smaller teams but essential for large-scale operations. It provides a visual, flow-based design canvas that can be more approachable for users who prefer a low-code interface over Python-based scripting.

The trade-off for this power is higher cost and complexity compared to open-source alternatives. DataStage provides the most value for large enterprises, particularly those already utilizing other IBM products. Its extensive features come with a steeper learning curve and a premium price tag, making it less suitable for startups or teams needing a lightweight, agile solution.

| Feature | IBM DataStage |

|---|---|

| Model | Commercial (SaaS & Platform Editions) |

| Cost | Paid (Tiered plans, contact sales) |

| Core Language | Low-Code/Visual & Scripting |

| Key Strength | Enterprise-grade governance & data quality |

| Best For | Large enterprises needing a governed, scalable, and feature-complete ETL/ELT solution. |

Website: https://www.ibm.com/products/datastage

12. AWS Marketplace (data orchestration listings)

For teams deeply embedded in the AWS ecosystem, the AWS Marketplace isn’t a data orchestration tool itself, but rather a strategic procurement hub to find and deploy them. It offers a streamlined way to discover, subscribe to, and manage various orchestration solutions, including managed Airflow providers like Astronomer and pre-configured AMIs. This approach significantly simplifies billing and vendor management for RevOps teams already leveraging AWS.

The primary benefit is consolidating your software spend onto your existing AWS bill. This is a huge win for finance and procurement departments, as it leverages established enterprise agreements and private offers. Instead of onboarding a new vendor, you can subscribe to a powerful orchestration platform with a few clicks, making it one of the most efficient ways to acquire the best data orchestration tools if you are an existing AWS customer.

Key Differentiators & Considerations

The marketplace model excels at speed and convenience. You can find both fully managed SaaS subscriptions and self-hosted AMI listings, giving you flexibility in your deployment model. The ability to use private offers can also lead to customized pricing and terms that aren’t publicly available.

However, the convenience comes with a need for due diligence. The quality of listings, vendor support, and Service Level Agreements (SLAs) can vary widely. It is crucial to vet each provider thoroughly before committing. Naturally, as an AWS-native platform, its options are almost exclusively focused on services that integrate well within the AWS cloud, making it less suitable for multi-cloud or on-premise strategies.

| Feature | AWS Marketplace |

|---|---|

| Model | B2B Marketplace (SaaS & AMI listings) |

| Cost | Varies by vendor (Pay-As-You-Go, Annual) |

| Core Language | N/A (Platform Agnostic) |

| Key Strength | Consolidated AWS billing & fast procurement |

| Best For | AWS-centric teams looking to simplify vendor management and billing. |

Website: https://aws.amazon.com/marketplace/

Top 12 Data Orchestration Tools: Feature Comparison

| Platform | Core features | Best for / Target audience | Value proposition / Pros | Pricing / Cost notes |

|---|---|---|---|---|

| Apache Airflow | Python-native DAGs, provider ecosystem, web UI | Code-first teams wanting open-source orchestration | Flexible, large community, cloud-agnostic | Free (Apache 2.0) + infra/ops costs |

| Astronomer (Astro) | Managed Airflow, autoscaling, enterprise controls | Teams wanting managed Airflow with SLAs & support | Fast path to prod, day‑zero support, rollbacks | Usage-based; can be expensive at scale |

| Google Cloud Composer | Managed Airflow with deep GCP integrations | GCP-centric pipelines (BigQuery, Dataflow, Pub/Sub) | Managed infra and upgrades, native GCP hooks | Milli-DCU hours + env fees; multi-part pricing |

| Amazon MWAA | Managed Airflow with AWS security/hooks | AWS-focused teams needing IAM/VPC integration | Native AWS security, documented autoscaling | Per-hour environment pricing; can add up |

| Azure Data Factory | Low/no-code pipelines, integration runtimes | Microsoft/SQL Server/Databricks ecosystems | Rich Azure connectors, GUI + code options | Per-activity/run pricing; complex at high freq. |

| Prefect (OSS + Cloud) | Python-first flows, hybrid execution, modern UI | Dev teams wanting OSS or managed hybrid control plane | Developer-friendly, flexible self-host or managed | Free OSS; Cloud tiers add features & limits |

| Dagster & Dagster+ | Asset-oriented graphs, lineage, observability | Teams focused on assets, lineage and governance | Strong ergonomics, catalog & observability | Credit-based managed pricing; starter tiers exist |

| Argo Workflows | Kubernetes-native CRDs, container tasks, scale | Kubernetes/ML/data teams running container workloads | k8s-native scale, cloud-agnostic, OSS community | Free OSS; requires k8s infra and ops costs |

| Snowflake Tasks / Workflows | Serverless tasks, SQL/Stored Proc orchestration | Pipelines primarily running inside Snowflake | Orchestrates in-platform, tight Snowpark coupling | Billed via Snowflake consumption model |

| BMC Control‑M | Enterprise workflow orchestration, SLA/Gov | Large enterprises needing governance and DR | Mature connectors, SLA management, 24x7 support | Enterprise pricing; higher total cost |

| IBM DataStage | ETL/ELT design, metadata, data quality | Large governed orgs, hybrid/multicloud deployments | Feature-rich for complex integrations at scale | Higher cost; typically enterprise licensing |

| AWS Marketplace (listings) | Procurement portal for managed offerings | AWS procurement teams and consolidated billing | Consolidated billing, private offers, quick procurement | Pricing varies by listing; vendor-dependent |

Making the Right Choice for Your Team’s Symphony

We’ve journeyed through a landscape of powerful platforms, from the open-source titan Apache Airflow to modern, developer-friendly tools like Prefect and Dagster. The key takeaway? There is no single “best” data orchestration tool for everyone. The right choice is a deeply personal one, shaped by your team’s unique DNA, existing tech stack, and strategic goals.

Choosing your orchestrator is like selecting a conductor for your data orchestra. It’s not just about picking the most famous name; it’s about finding the one whose style, expertise, and approach will bring out the best in your ensemble of data sources, transformations, and destinations.

Key Takeaways and Final Considerations

As you weigh your options, revisit the core evaluation criteria we discussed. Don’t get distracted by shiny features; focus on what truly moves the needle for your team’s productivity and your data’s reliability.

Here are the most critical factors to guide your final decision:

- Team Skills & Ergonomics: Does your team live and breathe Python? Prefect or Dagster might feel like a natural fit. Are you more comfortable with a low-code, GUI-driven approach? Azure Data Factory or a managed service with a strong UI could be the winner. The best tool is one your team will actually enjoy using.

- Infrastructure & Ecosystem: Your existing cloud provider plays a huge role. If you’re all-in on AWS, exploring MWAA first makes perfect sense. Similarly, Google Cloud Composer is a no-brainer for GCP shops. Aligning your orchestrator with your cloud environment simplifies security, billing, and integration.

- Scale & Complexity: A small RevOps team syncing a few key objects from Salesforce might not need the heavyweight power of a self-hosted Airflow cluster. A simple Snowflake Task graph could be sufficient. Conversely, an enterprise managing hundreds of interdependent dbt models and real-time data feeds needs the robust dependency management and observability of a full-featured platform.

- Total Cost of Ownership (TCO): Remember to look beyond the sticker price. Open-source tools are “free” like a puppy is free. You must account for the ongoing cost of infrastructure, maintenance, upgrades, and the valuable engineering hours required to keep it all running smoothly. Managed services often provide a much more predictable and ultimately lower TCO.

Your Actionable Next Steps

Feeling overwhelmed? That’s completely normal. The world of data orchestration is complex. To move forward with confidence, break the process down into manageable steps.

- Shortlist Your Top 3: Based on the criteria above, pick three tools that seem like the best potential fit.

- Run a Proof of Concept (POC): Don’t just read documentation. Get your hands dirty. Task a small team with building a single, representative pipeline in each of your top three choices. This could be a common RevOps challenge like syncing Salesforce Account and Opportunity data to Snowflake.

- Evaluate the Experience: During the POC, assess everything. How long did it take to get started? How was the developer experience? How intuitive is the UI for monitoring and debugging? Was it easy to implement retries and alerting?

This hands-on approach will reveal nuances and frustrations that you’d never find in a feature matrix. It transforms an abstract comparison into a concrete, experience-based decision. Ultimately, the best data orchestration tools are the ones that fade into the background, reliably empowering your team to turn raw data into strategic insights without the operational headache.

Tired of weighing infrastructure costs and managing complex DAGs? If your goal is to get reliable, actionable data from your go-to-market systems without building an in-house data engineering team, RevOps JET can help. We provide a fully managed data orchestration and engineering service for a fixed monthly fee, letting you focus on revenue strategy, not pipeline maintenance. Learn more about RevOps JET and see how we can build your data symphony for you.