Picture this: your go-to-market team is in the middle of a high-stakes race, but their engine is sputtering and stalling. Why? Because it’s running on dirty fuel. That’s exactly what bad data does to your business. It’s not some minor inconvenience; it’s a silent growth killer that grinds your momentum to a halt and quietly drains your resources.

The real cost of bad data isn’t just an abstract number on a spreadsheet—it’s a direct, painful hit to your bottom line.

Your Go-To-Market Engine Is Sputtering: Here’s Why

Think of bad data as the sludge clogging up the gears of your revenue engine. For B2B SaaS companies, this isn’t a theoretical problem. It shows up as tangible, everyday friction that undermines every single strategic move you try to make.

It’s the SDR who spends half their day chasing phantom leads because of duplicate contacts and outdated information in the CRM. It’s the marketing team launching a brilliant campaign that completely flops because it’s targeting an audience based on faulty firmographics. These aren’t just isolated incidents; they’re symptoms of a much deeper, systemic issue.

This disconnect between strategy and execution creates a massive ripple effect. When executives gather for quarterly planning, they’re staring at forecasts they can’t trust, making it impossible to allocate resources effectively or set realistic growth targets.

The financial toll of this operational chaos is genuinely staggering. Research shows that poor data quality costs organizations an average of $12.9 million annually. That’s not just a headline-grabbing statistic; it represents real, quantifiable losses from inaccurate sales projections, missed opportunities, and operational costs that spiral as teams scramble to manually fix endless errors. You can dig deeper into the full research about data quality’s financial impact to see just how widespread the problem is.

Before we dive into formulas and ROI, let’s look at where these costs are actually coming from. This table breaks down the key areas where bad data is silently eating away at your profits.

The Hidden Costs of Bad Data: A Quick Overview

| Impact Area | Direct Consequence | Example in B2B SaaS |
|---|---|---|
| Lost Revenue | Missed sales opportunities from inaccurate or incomplete leads. | High-potential leads are never contacted because they lack a phone number or are routed to the wrong sales territory. |
| Customer Churn | Poor customer experience due to fragmented communication. | A renewal is missed because the billing contact information in your CRM is six months out of date. |
| Wasted Time | Sales and marketing teams manually cleaning and verifying data. | An SDR spends 10-15 hours a week cross-referencing LinkedIn to validate contacts instead of actually selling. |
| Flawed Analytics | Unreliable reports leading to poor strategic decisions. | The C-suite invests heavily in a new market segment based on flawed data, only to find the ICP was completely wrong. |
| Compliance Fines | Violating data privacy regulations like GDPR or CCPA. | Sending marketing emails to contacts who have opted out because the unsubscribe request was never properly synced. |
| Engineering Rework | Development teams building fixes for data-related issues. | Engineers spend a full sprint building custom data connectors because the marketing automation tool can’t read the CRM data. |

As you can see, the problem goes far beyond a messy CRM. It’s a foundational weakness that compromises every part of your go-to-market motion.

The Anatomy of a Data-Driven Breakdown

To really get a feel for the cost of bad data quality, let’s walk through how it typically unravels in a B2B SaaS company. The issues always seem small at first, but they compound with terrifying speed.

It often starts with something as simple as inconsistent data entry. One sales rep enters “United States,” another types “USA,” and a third puts “U.S.” This tiny variance immediately fractures your customer profiles, turning segmentation and reporting into a complete nightmare.

This single issue quickly spirals into bigger problems:

- Duplicate Contacts: Multiple reps start unknowingly engaging the same prospect, which creates a disjointed and deeply unprofessional customer experience.

- Outdated Information: A key contact leaves a target account, but the CRM record isn’t updated for six months. Your team wastes precious time and resources trying to connect with a ghost.

- Incomplete Profiles: Critical fields needed for lead scoring and territory assignment are left blank, causing high-value leads to slip right through the cracks, unseen.

These foundational cracks weaken every single one of your go-to-market motions. Your expensive marketing automation platform can’t personalize anything effectively, and your sales engagement tools just end up pushing irrelevant messages. This is where understanding how data-driven marketing solutions can turn raw information into a real competitive advantage becomes absolutely critical.

But before you can quantify the damage and build a case for an engineered solution, you have to be able to spot these symptoms in your own operations.

The Five Core Financial Drains of Poor Data Quality

Bad data isn’t just a nuisance; it’s a quiet killer of growth. Think of it less as a single problem and more as a leaky pipe, with the costs dripping into every corner of your business. To really grasp the damage, you have to look beyond a messy CRM and see how it hits your bottom line.

Let’s break down the five most common ways bad data is costing you money right now.

1. Lost Revenue and Customer Churn

This is the most direct and painful hit. When your sales team is working from a CRM filled with junk—outdated phone numbers, incorrect titles, missing contacts—they’re flying blind. Promising leads get lost in the shuffle, upsell opportunities are completely missed, and reps waste time chasing ghosts.

The result? Deals die before they even enter the pipeline.

It also actively pushes your existing customers away. Imagine a loyal customer getting an invoice addressed to the wrong person or being hounded by three different reps for the same renewal. It screams chaos. That erosion of trust is a one-way ticket to churn.

2. Wasted Go-To-Market Team Hours

Your go-to-market (GTM) team is one of your biggest investments, and bad data forces them to burn their most precious resource: time. Picture your sales development reps spending 25% of their week just bouncing between their CRM and LinkedIn to verify if a contact still works at a company. That’s not selling; it’s manual data entry.

It plays out every single day:

- An SDR finds a great-looking lead in the system.

- The email bounces.

- They spend the next 20 minutes digging around for the right contact info.

- Finally, they can start their actual outreach.

Multiply that little time-suck across your entire team, and the operational cost becomes staggering. You can explore more about these business risks of poor data quality to see the full financial and operational picture.

3. Flawed Analytics and Poor Decisions

Ever heard the phrase “garbage in, garbage out”? When it comes to your data, it’s the gospel truth. If your source data is a mess, your analytics dashboards are nothing more than expensive works of fiction.

This is where the real danger lies. Leaders start making huge strategic bets—like launching a new product or expanding into a new market—based on a completely warped view of reality. The problem is rampant: a shocking 70% of organizations admit they can’t make sound strategic moves because they don’t trust their own customer data.

This creates a terrible cycle. Instead of using data to seize opportunities, leadership meetings devolve into arguments about whether the numbers on the screen are even right.

When leadership can’t trust the numbers, they revert to gut feelings and anecdotal evidence. This not only derails a data-driven culture but also puts the entire company’s growth strategy on unstable ground.

4. Compliance Fines and Reputational Risk

In an era of GDPR and CCPA, a simple data mistake can have serious financial blowback. Failing to sync an “unsubscribe” request across all your systems isn’t just a clumsy error—it’s a compliance violation that can trigger thousands of dollars in fines.

But the financial penalty is often just the beginning. The reputational damage is far worse. When a customer’s data preferences are ignored, or their information is mishandled, you’ve broken their trust. That’s a stain on your brand that is incredibly difficult to wash out and can cost you far more than any one-time fine.

5. Technical Debt and Engineering Rework

Finally, there’s the hidden tax that your technical teams are forced to pay. When data is a tangled, inconsistent mess, your engineers and analysts are stuck doing “data janitor” work. Instead of building innovative features, they’re patching together brittle, one-off fixes for broken data pipelines.

This is how technical debt spirals out of control. Each quick fix just adds another layer of complexity, making the whole system more fragile and likely to break again.

This rework often looks like:

- Writing complex scripts just to normalize state fields (e.g., turning “CA,” “Calif.,” and “California” into one standard).

- Manually de-duplicating records before they can be loaded into the data warehouse.

- Building custom connectors because your martech tools can’t make sense of the fragmented data in your CRM.
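To make the first item concrete, here is a rough Python sketch of what such a normalization script might look like. The alias table `STATE_ALIASES` and the function `normalize_state` are hypothetical names used only for illustration, and a real mapping would need to cover every variant that actually appears in your CRM.

```python
# Hypothetical alias table: each key is a lowercased variant with periods removed.
STATE_ALIASES = {
    "ca": "CA",
    "calif": "CA",
    "california": "CA",
}


def normalize_state(raw):
    """Return the standard code for a known variant, or None if unrecognized."""
    if raw is None:
        return None
    key = raw.strip().lower().replace(".", "")
    return STATE_ALIASES.get(key)


variants = ["CA", "Calif.", "California", " california ", "Cali"]
normalized = [normalize_state(v) for v in variants]
print(normalized)
```

Returning `None` for an unrecognized value, rather than guessing, lets the pipeline route those records to a review queue instead of silently writing a wrong state.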

Every hour an engineer spends cleaning up data is an hour they aren’t spending on innovation. It’s a silent drag on your product roadmap, keeping your most talented people from doing the work that actually moves the needle.

How to Calculate the Financial Impact on Your Business

Talking about “messy data” is one thing, but getting leadership to invest in a solution requires speaking their language. That language is money.

The key is to move from abstract problems to hard numbers. Instead of saying, “Our data hygiene is poor,” you can confidently state, “Our messy data cost us over $150,000 last quarter in wasted sales rep time alone.” Now that gets attention.

This section gives you some simple, back-of-the-napkin formulas to help you quantify the real cost of bad data quality in your B2B SaaS company. These aren’t complex academic models, but they are powerful enough to ground your conversations in financial reality.

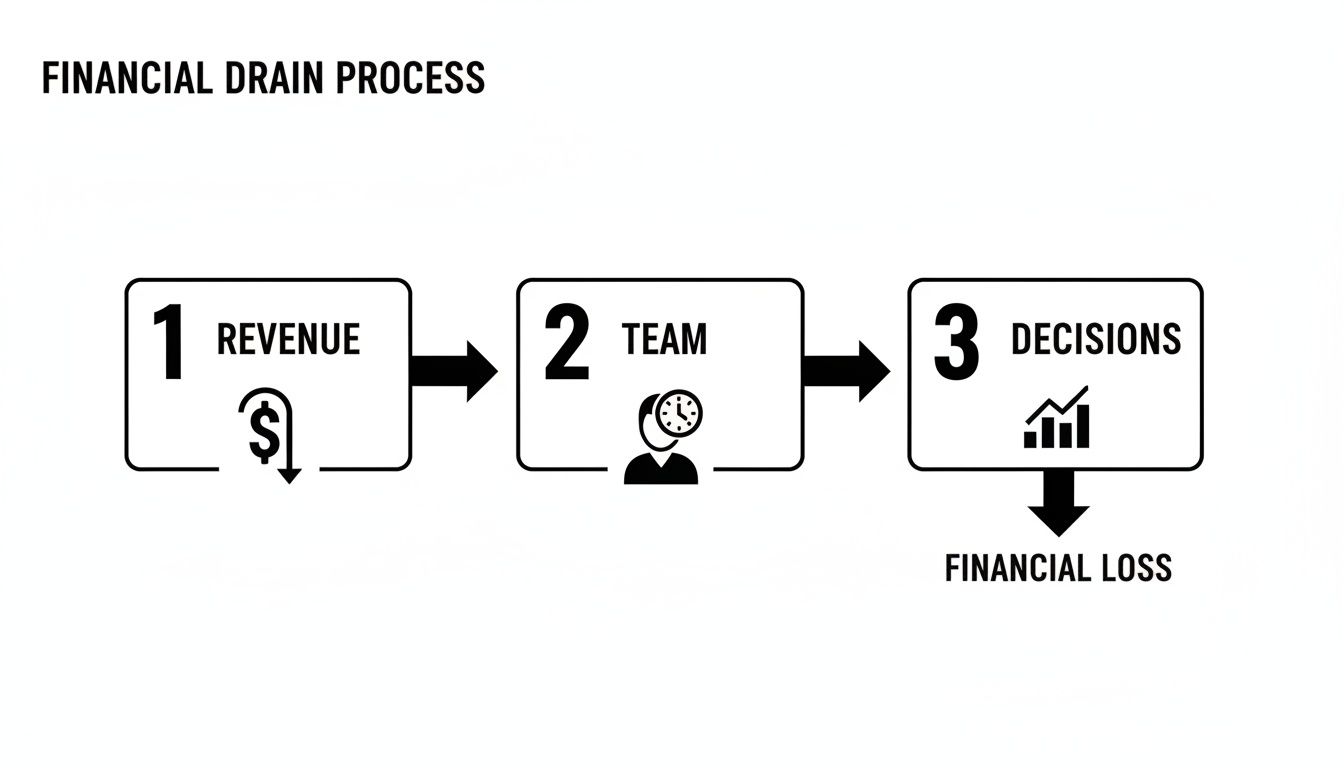

Bad data acts like a constant drain on your resources, siphoning away revenue, wasting your team’s precious time, and leading to poor strategic decisions.

As you can see, these problems don’t happen in a vacuum. They feed into each other, creating a vicious cycle of inefficiency that hits your bottom line, hard. Let’s break down exactly how to calculate the cost for each of these areas.

Formulas to Quantify Your Data Quality Costs

Use these simple formulas to estimate the financial impact of common data quality issues within your RevOps and GTM teams.

| Cost Metric | Formula | Variables Explained |
|---|---|---|
| Wasted SDR/BDR Time | (Avg Salary / 2,080) x (Hours Wasted/Wk x 52) x # of Reps | 2,080 is the standard number of annual work hours. This calculates the direct salary cost of non-selling activities. |
| Inaccurate Forecasting | abs(Forecasted Revenue - Actual Revenue) x (1 + Cost of Capital) | The Cost of Capital is the return investors expect. This quantifies the financial impact of misallocating resources based on bad projections. |
| Customer Churn | # of Customers Churned (Data-Related) x Avg ARR | ARR is Annual Recurring Revenue. This calculates the direct top-line revenue lost from service failures caused by data errors. |

These formulas are a great starting point for building a business case. They turn a fuzzy “data problem” into a clear financial liability that demands a solution.

Calculating the Cost of Wasted SDR Time

Your Sales Development Representative (SDR) team is one of your most expensive resources, and their time is gold. Every minute they spend manually verifying leads, hunting for the right contact info, or dealing with bounced emails is a minute they aren’t selling. You’re literally paying them for data entry.

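Here’s a straightforward way to calculate this cost: multiply each rep’s hourly rate (average salary / 2,080) by the hours they lose per year (hours wasted per week x 52), then multiply by the number of reps.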

Example Calculation: Let’s say you have a team of 10 SDRs, each earning an average salary of $75,000. After talking to them, you find they spend about 8 hours per week—an entire workday—grappling with bad data.

- Hourly Rate: $75,000 / 2,080 = $36.06

- Annual Wasted Cost per SDR: $36.06 x (8 hours/week x 52 weeks) ≈ $15,000

- Total Team Cost: $15,000 x 10 SDRs = $150,000 per year

That’s $150,000 a year flushed away on activities that generate zero pipeline. It’s a powerful number to bring to your next leadership meeting.
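If you want to rerun this with your own numbers, the wasted-time formula fits in a few lines of Python. This is a minimal sketch; the function and parameter names are illustrative. Because it does not round the hourly rate before multiplying, the example inputs come out to an even $150,000.

```python
WORK_HOURS_PER_YEAR = 2080  # standard annual work hours used in the formula
WEEKS_PER_YEAR = 52


def wasted_sdr_cost(avg_salary, hours_wasted_per_week, num_reps):
    """Annual salary cost of the hours reps lose to bad data."""
    hourly_rate = avg_salary / WORK_HOURS_PER_YEAR
    wasted_hours_per_rep = hours_wasted_per_week * WEEKS_PER_YEAR
    return hourly_rate * wasted_hours_per_rep * num_reps


# Inputs from the example: 10 SDRs, $75,000 average salary, 8 hours lost per week.
team_cost = wasted_sdr_cost(avg_salary=75_000, hours_wasted_per_week=8, num_reps=10)
print(f"${team_cost:,.0f} per year")
```

Swapping in the hours your own reps report is usually the most persuasive change, since that figure comes straight from the team.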

The real cost here isn’t just the salary. It’s the opportunity cost of the meetings that weren’t booked and the deals that never happened because your top-of-funnel team was bogged down in manual, low-value work.

Calculating the Cost of Inaccurate Forecasting

When your CRM is a minefield of duplicate opportunities, wrong deal stages, and wishful-thinking close dates, forecasting becomes more like astrology than science. That gap between your forecast and what you actually bring in isn’t just an annoyance; it leads to terrible resource allocation, missed targets, and a loss of credibility with your board.

Example Calculation: Imagine your team forecasted $5 million in new ARR for the quarter but only managed to close $4.2 million. That’s a variance of $800,000. Let’s say your company’s cost of capital (the return expected by investors) is 10%.

- Forecast Variance: $5,000,000 - $4,200,000 = $800,000

- Cost of Inaccuracy: $800,000 x (1 + 0.10) = $880,000

This calculation shows that the $800,000 miss has an implied cost of $880,000 once you factor in the capital that was tied up or misallocated based on that faulty forecast.
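The same calculation can be written as a short Python function. Treat this as a sketch of the formula above rather than a finance model; the function and parameter names are illustrative.

```python
def forecast_inaccuracy_cost(forecast, actual, cost_of_capital):
    """Absolute forecast variance, grossed up by the cost of capital."""
    variance = abs(forecast - actual)
    return variance * (1 + cost_of_capital)


# Inputs from the example: $5M forecast, $4.2M closed, 10% cost of capital.
cost = forecast_inaccuracy_cost(5_000_000, 4_200_000, 0.10)
print(f"${cost:,.0f}")
```

Taking the absolute value means an over-forecast and an under-forecast of the same size are costed identically, which matches the formula’s treatment of the variance.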

Calculating the Cost of Customer Churn from Bad Data

Finally, let’s draw a direct line from bad data to customer churn. It happens more than you think. A renewal notice gets sent to an employee who left six months ago. A critical support ticket gets lost because of a duplicate account record. These aren’t just mistakes; they’re relationship-killers.

Example Calculation: Let’s say a post-mortem analysis reveals that 5 customers churned last year for reasons that trace directly back to data-related service failures. If your average customer ARR is $50,000, the math is painfully simple.

- Revenue Lost: 5 customers x $50,000 ARR = $250,000

This $250,000 in lost recurring revenue is a direct hit to your company’s health. By putting real numbers to these problems, you can transform a vague “data issue” into a rock-solid business case for investing in a proper data foundation.
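One way to get the “data-related” count is to tag each churned account with a reason during the post-mortem and tally from there. The Python sketch below assumes a hypothetical churn log and hypothetical reason labels; only the formula itself comes from the table above.

```python
# Hypothetical reason labels that count as data-related failures.
DATA_RELATED_REASONS = {"stale_renewal_contact", "duplicate_account_record"}

# Hypothetical post-mortem log: (account, churn reason).
churn_log = [
    ("Account A", "stale_renewal_contact"),
    ("Account B", "duplicate_account_record"),
    ("Account C", "pricing"),
    ("Account D", "stale_renewal_contact"),
    ("Account E", "competitor"),
    ("Account F", "duplicate_account_record"),
    ("Account G", "stale_renewal_contact"),
]


def data_related_churn_cost(log, avg_arr):
    """# of customers churned for data-related reasons x average ARR."""
    churned = sum(1 for _, reason in log if reason in DATA_RELATED_REASONS)
    return churned * avg_arr


lost_arr = data_related_churn_cost(churn_log, avg_arr=50_000)
print(f"${lost_arr:,.0f}")
```

Keeping the reason labels explicit makes the number defensible: anyone who questions the total can see exactly which accounts were counted and why.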

Measuring Data Health with Actionable KPIs

Calculating the financial damage from past mistakes is a great way to get leadership’s attention. But let’s be honest, an effective RevOps engine doesn’t just clean up yesterday’s messes; it prevents tomorrow’s. To get there, you need to stop being reactive and start being proactive. After all, you can’t fix what you can’t measure.

This means treating your data like the critical business asset it is. Just like you obsess over pipeline velocity or customer acquisition cost, you need to consistently track the health of the data that fuels those numbers. This is how you turn data management from a dreaded cleanup chore into a real strategic advantage.

The Four Pillars of Data Health

You don’t need a hundred different metrics to get a handle on your data quality. The reality is, you can get a comprehensive, at-a-glance view by focusing on four core pillars. Think of these KPIs as your early-warning system—they’ll flag problems before they snowball into expensive business disasters.

Here are the essential data quality KPIs every RevOps leader should have on their dashboard:

- Data Completeness: What percentage of your records have all the critical fields filled out? An incomplete record—a lead missing a phone number or an account without an industry tag—is a dead end for segmentation, routing, and outreach.

- Data Accuracy: Is the information in your database actually correct? This one’s a bit tougher to measure, but it’s arguably the most important. Inaccurate data leads directly to flawed strategies and completely wasted effort.

- Data Uniqueness: How many duplicate records are lurking in your system? Duplicates are the silent killer of efficiency, creating fragmented customer views, causing reps to step on each other’s toes, and completely skewing your reports.

- Data Timeliness: How fresh is your data? A contact’s job title from two years ago isn’t just inaccurate—it’s useless. Worse, using it makes your team look unprofessional and out of touch.

Just keeping an eye on these four pillars is the first real step toward building a culture of data excellence.

Ignoring these KPIs isn’t a minor oversight; it’s a financial drain. Some estimates suggest a shocking 20-30% of enterprise revenue is lost to simple inefficiencies like fragmented records and poor governance. B2B SaaS companies feel this pain most acutely as they try to scale on top of a shaky data foundation.

It gets worse. Data teams often burn 50% of their time just fixing these preventable issues, from de-duping records to manually correcting the 47% of new entries that contain critical errors. Those mistakes then cascade into broken lead scoring and territory logic, crippling the entire go-to-market engine.

How to Measure These KPIs

The good news is you don’t need an army of analysts to start tracking these metrics. You can get started right now by building some simple reports in your CRM or data warehouse.

A Practical Measurement Framework:

| KPI | How to Measure in Your CRM/Warehouse | Why It Matters |
|---|---|---|
| Completeness | Create a report showing the percentage of contacts where Phone, Title, and Industry are not blank. Set a benchmark, like 95% completion. | If this score is low, your segmentation is broken, lead routing is failing, and your SDRs are wasting hours on manual research. |
| Accuracy | Use a third-party data enrichment tool to validate a sample of 1,000 emails. Your accuracy score is the percentage that comes back as valid. | An email accuracy score below 90% means your marketing campaigns are tanking and your sender reputation is at risk. |
| Uniqueness | Run a duplicate rule report in your CRM. Calculate your duplicate rate with: (Duplicate Records / Total Records) * 100. | A duplication rate above 5% is a massive red flag. It points to wasted sales efforts and a confusing customer experience. |
| Timeliness | Calculate the percentage of records modified or verified in the last 6 months: (Records Updated in Last 6 Months / Total Records) * 100. | A low timeliness score means your team is flying blind with stale information, leading to irrelevant outreach and missed opportunities. |
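If your records are exported from the CRM or already sit in a warehouse, three of these KPIs can be computed with a few lines of Python. The sketch below is illustrative only: the field names (`email`, `phone`, `title`, `industry`, `last_verified`) are assumptions about your export, duplicates are matched on normalized email (your CRM’s duplicate rules may differ), and six months is approximated as 183 days. Accuracy is left out because it depends on a third-party validation service.

```python
from datetime import date, timedelta

CRITICAL_FIELDS = ("phone", "title", "industry")  # assumed critical fields


def completeness(records):
    """Percent of records with every critical field filled in."""
    complete = sum(1 for r in records if all(r.get(f) for f in CRITICAL_FIELDS))
    return 100 * complete / len(records)


def duplicate_rate(records):
    """Percent of records whose normalized email was already seen."""
    seen, duplicates = set(), 0
    for r in records:
        key = r["email"].strip().lower()
        if key in seen:
            duplicates += 1
        else:
            seen.add(key)
    return 100 * duplicates / len(records)


def timeliness(records, today, max_age_days=183):
    """Percent of records verified within roughly the last six months."""
    cutoff = today - timedelta(days=max_age_days)
    fresh = sum(1 for r in records if r["last_verified"] >= cutoff)
    return 100 * fresh / len(records)


# Hypothetical sample records.
records = [
    {"email": "a@x.com", "phone": "555-0100", "title": "VP Sales",
     "industry": "SaaS", "last_verified": date(2024, 5, 1)},
    {"email": "A@x.com ", "phone": "", "title": "VP Sales",
     "industry": "SaaS", "last_verified": date(2023, 1, 15)},
    {"email": "b@y.com", "phone": "555-0101", "title": "CRO",
     "industry": "Fintech", "last_verified": date(2024, 3, 10)},
    {"email": "c@z.com", "phone": "555-0102", "title": "RevOps Lead",
     "industry": None, "last_verified": date(2022, 11, 2)},
]

today = date(2024, 6, 1)
scores = {
    "completeness": completeness(records),
    "duplicate_rate": duplicate_rate(records),
    "timeliness": timeliness(records, today),
}
print(scores)
```

Running something like this on a schedule and charting the three scores over time gives you the trend line, which is usually more useful than any single snapshot.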

By setting these benchmarks and tracking them consistently, you can finally prove the ROI of your data initiatives and build a rock-solid case for investing in an engineered data foundation. This kind of disciplined tracking is a cornerstone of any serious data strategy. To go a level deeper on this, check out our guide on what is data observability.

Moving from Quick Fixes to an Engineered Foundation

Realizing that bad data is costing you money is the easy part. The real challenge is breaking the endless cycle of cleanup. So many teams fall into the trap of buying yet another data-cleansing tool, hoping it’s the silver bullet. But while those tools might offer some temporary relief, they rarely fix the root cause. You end up stuck in a reactive loop, constantly fixing problems instead of preventing them.

A real, lasting solution demands a fundamental shift in thinking. It’s about moving away from temporary fixes and toward building a resilient, engineered data foundation. This isn’t about more manual cleanup; it’s about architecting systems that stop bad data at the source. Think of it as the difference between constantly patching a leaky roof and building a new one designed to withstand any storm.

This engineering-led approach tackles the core architectural flaws that let bad data seep into your systems in the first place. It means treating your CRM and data pipelines with the same discipline and rigor you’d apply to your core product.

Architecting a Scalable and Resilient CRM

Your CRM shouldn’t be a chaotic free-for-all where good data goes to die. It needs to be a fortress, meticulously designed to protect data integrity from the moment a record is created. A truly engineered CRM foundation is built on prevention, not just correction.

This starts with something as simple as establishing strict validation rules. For example, instead of allowing free-text entry for a “State” field, you implement a dropdown list. This one change completely eliminates the inevitable inconsistencies like “CA,” “Calif,” and “California” that wreak havoc on territory assignments and reporting.

Beyond basic validation, a truly scalable architecture includes:

- Thoughtful Schema Design: You need custom objects and fields that map directly to your business processes. This ensures there’s a logical home for every critical piece of information.

- Automated Data Governance: Use code to enforce your rules, like automatically preventing a deal from moving to the next stage if key fields are missing.

- Robust User Permissions: Carefully limit who can create or modify certain records. This drastically reduces the risk of accidental errors from well-meaning team members.

These aren’t just admin settings; they are foundational engineering choices that create a system that is clean by design. To truly get ahead of the problem, you have to adopt essential database management best practices.
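As a rough illustration of automated governance, the stage-gate rule described above might look like this in Python. In practice the logic would usually live in your CRM as a validation rule or trigger; the stage names, field names, and functions here are hypothetical.

```python
# Hypothetical required fields for each pipeline stage.
REQUIRED_FIELDS_BY_STAGE = {
    "Proposal": ("amount", "close_date", "primary_contact"),
    "Negotiation": ("amount", "close_date", "primary_contact", "decision_maker"),
}


def missing_fields(deal, target_stage):
    """List the required fields that are absent or blank for the target stage."""
    required = REQUIRED_FIELDS_BY_STAGE.get(target_stage, ())
    return [f for f in required if deal.get(f) in (None, "")]


def advance_stage(deal, target_stage):
    """Return an updated copy of the deal, or raise if key fields are missing."""
    missing = missing_fields(deal, target_stage)
    if missing:
        raise ValueError(
            f"Cannot move to {target_stage}: missing {', '.join(missing)}"
        )
    return {**deal, "stage": target_stage}


deal = {"name": "Acme renewal", "stage": "Discovery", "amount": 50_000,
        "close_date": "", "primary_contact": "Dana"}

try:
    advance_stage(deal, "Proposal")
    blocked = False
except ValueError as err:
    blocked = True
    print(err)

# Once the blank field is filled in, the same call succeeds.
fixed = advance_stage({**deal, "close_date": "2024-09-30"}, "Proposal")
```

The useful property is that the rule is declared once in a table, so adding a new stage or a new required field is a one-line change rather than a retraining exercise.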

Building Reliable, Production-Grade Data Pipelines

Once your CRM is structured for success, the next battleground is ensuring data flows reliably between all your systems. That connection between your CRM and your data warehouse is often a primary source of data decay. Manual CSV uploads or brittle, point-and-click connectors are just ticking time bombs waiting to fail.

An engineered approach replaces these fragile links with production-grade data pipelines. These are automated, monitored systems built with code and designed for total resilience and transparency.

A production-grade pipeline isn’t just about moving data from point A to point B. It’s about ensuring that data arrives accurately, on time, and with its full context intact. It includes automated error handling, retry logic for temporary API failures, and proactive monitoring that alerts your team the moment an issue arises—often before downstream users even notice.

This means your analytics team is always working with fresh, trustworthy data. Dashboards become reliable, forecasts are accurate, and your leadership team can finally make decisions with confidence. For a deeper dive, check out these data integration best practices that are core to any modern data stack.
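To show what retry logic for temporary API failures can look like, here is a minimal Python sketch using exponential backoff. `TransientAPIError`, `with_retries`, and `flaky_fetch` are hypothetical names, and the `alert` callback stands in for whatever monitoring or paging channel your team actually uses.

```python
import time


class TransientAPIError(Exception):
    """Hypothetical error for temporary failures such as timeouts or rate limits."""


def with_retries(fetch, max_attempts=4, base_delay=1.0, sleep=time.sleep, alert=print):
    """Call fetch(), backing off 1s, 2s, 4s... between transient failures."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch()
        except TransientAPIError as exc:
            if attempt == max_attempts:
                # Out of attempts: notify the team, then surface the error.
                alert(f"Sync failed after {max_attempts} attempts: {exc}")
                raise
            sleep(base_delay * 2 ** (attempt - 1))


# Demo: a fake source that fails twice, then succeeds.
calls = {"count": 0}


def flaky_fetch():
    calls["count"] += 1
    if calls["count"] < 3:
        raise TransientAPIError("temporary outage")
    return [{"id": 1, "email": "a@example.com"}]


delays = []  # record the waits instead of actually sleeping in the demo
rows = with_retries(flaky_fetch, sleep=delays.append)
print(rows, delays)
```

Only the transient error type is retried; anything else (bad credentials, a schema change) fails immediately, which is generally what you want because retrying will not fix it.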

Using Code for Complex Normalization and Transformation

Let’s be honest: some data challenges are just too complex for out-of-the-box tools. Normalizing company names or standardizing thousands of job titles across your entire database requires sophisticated logic. Trying to solve this with manual cleanup is a losing battle you’ll fight every single week.

This is where custom code becomes your most powerful asset. An engineer can write a Python script or a dbt model to handle these complex transformations with absolute precision and consistency.

A Practical Example: The Code-Driven Solution Imagine you need to categorize leads into specific buyer personas based on their job titles.

- The Problem: You have thousands of messy variations like “VP of Sales,” “Sales VP,” “Vice President, Sales,” and “Head of Sales.”

- The Quick Fix: A RevOps analyst spends days wrestling with complex spreadsheet formulas to manually categorize them. It’s slow, error-prone, and has to be repeated every single month.

- The Engineered Solution: An engineer writes a single, reusable SQL transformation. This code automatically standardizes all those title variations into a clean “Sales Leadership” persona every time the data pipeline runs.

This approach transforms a recurring headache into a maintainable asset. The code is version-controlled, documented, and can be easily updated as your business rules change. It’s a solution that actually scales, saving hundreds of hours and eliminating a major source of the high cost of bad data quality for good.
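The example above describes a SQL transformation; the sketch below expresses the same idea in Python purely for illustration. The matching rules and the `persona_for` function are hypothetical, cover only the four title variants mentioned, and would need to grow with your real data.

```python
import re

# Hypothetical rules: a title needs both a seniority keyword and the function keyword.
SENIORITY = re.compile(r"\b(vp|vice president|head)\b")
SALES = re.compile(r"\bsales\b")


def persona_for(title):
    """Map a raw job title to a persona, or 'Unclassified' if no rule matches."""
    t = title.lower()
    if SENIORITY.search(t) and SALES.search(t):
        return "Sales Leadership"
    return "Unclassified"


titles = [
    "VP of Sales",
    "Sales VP",
    "Vice President, Sales",
    "Head of Sales",
    "Sales Development Representative",
]
personas = {t: persona_for(t) for t in titles}
for title, persona in personas.items():
    print(f"{title!r} -> {persona}")
```

Leaving unmatched titles as "Unclassified" rather than forcing them into a persona keeps the gaps visible, so new rules can be added deliberately as patterns emerge.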

A Few Common Questions We Hear About Data Quality Costs

Even after seeing the numbers, figuring out where to start can feel like a huge task. I’ve seen countless RevOps leaders and founders get stuck here, trying to move from constant firefighting to building something solid. It’s a common hurdle.

Let’s break down some of the questions that are probably running through your head. These aren’t abstract theories—they’re practical answers to help you start making a real difference, even if you’re tight on resources.

What’s a Realistic First Step on a Small Budget?

You don’t need a six-figure check or a massive project to get started. Honestly, the best way to begin is to think small and targeted. Forget trying to boil the ocean. Instead, zero in on the data that has the biggest impact on your go-to-market motion right now.

Start by picking just three data fields that are absolutely critical for your sales and marketing teams. This is usually stuff like Job Title, Industry, and a direct Phone Number. A single mistake in one of these fields can completely torpedo a sales play or an entire marketing campaign.

Once you have your three fields, here’s what you do:

- Do a quick manual audit. Grab a sample of 100 recent leads and just check those three fields for accuracy and completeness. This little exercise will give you a surprisingly clear picture of how big your problem is.

- Focus your cleanup efforts. Don’t try to clean your whole database. Just spend a few hours a week tidying up these specific fields on your highest-value accounts. That way, your effort is directly tied to potential revenue.

- Set up some simple guardrails. Make small tweaks in your CRM, like changing the Industry field from a free-text box to a required picklist. It’s a low-effort change that prevents a ton of future errors.

This approach gives you some quick, tangible wins you can show off to justify a bigger investment down the road.

How Do I Get Sales and Marketing to Actually Care About This?

Getting your GTM teams on board isn’t about giving them a lecture on data hygiene. It’s about showing them what’s in it for them. Salespeople and marketers are driven by results—commissions, pipeline, hitting their MQL number. You have to connect the dots between data quality and those outcomes.

Frame the conversation around their own success. For a sales rep, clean data isn’t just about a tidy CRM; it’s about spending less time on mind-numbing grunt work and more time actually closing deals.

Show them the math. A simple calculation like, “Every hour you spend fixing bad data costs you $X in potential commission,” makes the problem hit home. When they realize bad data is literally taking money out of their pocket, their perspective tends to change fast.

For your marketing team, you can prove how accurate data makes their campaigns better. Show them how a clean, properly segmented list leads directly to higher open rates, better engagement, and more qualified leads for sales. Once they see the clear line between data quality and hitting their MQL targets, they’ll become your biggest allies.

Can’t We Just Buy a Tool to Fix This?

This is probably the biggest misconception out there. Data enrichment and cleaning tools can be incredibly helpful, but they are not a silver bullet. Buying a tool without fixing your underlying processes is like mopping the floor while a pipe is still leaking. You’re just treating the symptom, not the cause.

Tools are great for a one-time scrub or periodic cleanups, but they can’t stop bad data from getting into your systems in the first place. They don’t fix broken integrations, sloppy data entry habits, or a poorly designed CRM. Real, lasting improvement comes from a combination of your processes, your people, and your tech stack.

A sustainable solution needs a solid engineering foundation:

- Strong Processes: You need clear rules for data entry and governance.

- Engineered Systems: You need to build reliable, automated data pipelines that stop errors at the source.

- The Right Tools: Software should support your strong foundation, not act as a band-aid for a broken one.

Without that solid engineering and a well-defined process, even the fanciest tool will only give you a temporary fix.

At its core, bad data is an engineering problem, and it requires an engineering solution. If your team is stuck in a reactive loop, spending more time fixing data than creating value, RevOps JET can help. We provide on-demand technical revenue operations engineering to build the resilient data foundation you need—all for a fixed monthly fee. Stop cleaning and start building with RevOps JET.